Mixed Reality exists in many forms along a continuum from Augmented Reality

(reality enhanced by graphics, such as Sutherland's work from more than 30

years ago) to more recent efforts at Augmented Virtuality

(graphics enhanced by reality, graphics enhanced by video, etc.).

Mixed Reality provides numerous ways to add together (mix)

various proportions of real and virtual worlds.

However, Mediated Reality is an even older tradition, introduced by

Stratton more than 100 years ago. Stratton presented two important ideas:

Keywords: Virtual Reality, Mediated Reality, Mixed Reality, Modulated Reality,

Modified Reality, Wearable Computing, Personal Imaging, Personal Technologies,

Humanistic Intelligence, Intelligent Image Processing

Illusory transparency is an older concept than,

as well as a generalization of, the notion of video see-through.

Illusory transparency

includes media, and continues to apply to systems,

that do not necessarily involve video.

Examples of illusory transparency include computational

see-through by way of laser EyeTap

devices, as well as optical computing to produce a similar illusory

transparency under computer program control. With optical computing,

the concept of illusory transparency continues to provide

a distinction between devices that can modify (not just add to)

our visual perception and devices that cannot. Not all reality-modifying

devices involve video, but many still involve the concept of

illusory transparency.

It has been shown that the addition of video signals, in augmented reality,

is fundamentally flawed because cameras operate approximately

logarithmically and displays (including most head mounted displays)

operate anti-logarithmically

(Comparametric

Equations, IEEE Trans. Image Proc., 9(8) August, 2000, pp. 1389-1406).

Thus video mixers effectively perform something that is closer to

multiplication than it is addition, because they operate on approximately

the logarithms of the underlying true physical quantities of light.

Accordingly, antihomomorphic filters have been proposed for augmented

or mediated reality, wherein this undesirable effect is cancelled

(Intelligent

Image Processing, John Wiley and Sons, 2001) [Mann 01].
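The argument above can be illustrated with a minimal sketch, assuming (purely for illustration) an idealized camera whose pixel value is exactly the natural logarithm of the light quantity, and a display that is exactly its inverse. Real devices are only approximately logarithmic, and the function names below are hypothetical rather than taken from the cited works.

```python
import math

def camera(q):
    """Idealized camera: pixel value is the log of the light quantity q (q > 0)."""
    return math.log(q)

def display(v):
    """Idealized display: emitted light is the antilog of the pixel value v."""
    return math.exp(v)

def naive_mix(v1, v2):
    """Video mixer that simply adds pixel values."""
    return v1 + v2

def antihomomorphic_mix(v1, v2):
    """Undo the camera response, add in the light domain, reapply the response."""
    q_sum = math.exp(v1) + math.exp(v2)  # inverse of camera(), then true addition
    return camera(q_sum)

q_real, q_virtual = 3.0, 5.0             # assumed light quantities, arbitrary units
v_real, v_virtual = camera(q_real), camera(q_virtual)

# Adding pixel values multiplies the underlying light: about 3 * 5 = 15.
light_naive = display(naive_mix(v_real, v_virtual))
# Adding in the light domain gives the intended sum: about 3 + 5 = 8.
light_fixed = display(antihomomorphic_mix(v_real, v_virtual))
```

Under these assumptions the naive mixer shows the product of the two light quantities, whereas the corrected path shows their sum.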

The concept of mixing real and virtual worlds exists in a wide variety

of situations in the broadcast, entertainment, audiovisual, and computer

graphics industries, and beyond. Therefore a taxonomy of the different

mixtures of real and virtual information content [Milgram 94, Drascic 96],

with particular emphasis on a continuum from augmented reality

to augmented virtuality, is useful.

This taxonomy is especially useful in considering the way in

which real and virtual are combined in head mounted

displays [Milgram 99].

One of the earliest and most important such examples

was the eyeglasses George Stratton built

and wore. His eyewear, made from two lenses of equal focal length,

spaced two focal lengths apart,

was basically an inverting telescope with unity magnification,

so that he saw the world upside-down [Stratton 96, Stratton 97].

These eyeglasses, in many ways, actually diminished his perception of reality.

His deliberately diminished perception of reality was neither

graphics enhanced by video, nor was it video enhanced by graphics,

nor any linear combination of these two.

(Moreover it was an example of optical see-through that is not an

example of registered illusory transparency, i.e. it problematizes the

notion of the optical see-through concept because both the mediation

zone, as well as the space around it, are examples of optical-only

processing.)
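The claim that two lenses of equal focal length, spaced two focal lengths apart, form an inverting telescope of unity magnification can be checked with paraxial ray transfer matrices. The sketch below assumes ideal thin lenses and a ray described by (height, angle); the focal length value and the function names are hypothetical.

```python
def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def thin_lens(f):
    """Ray transfer matrix of an ideal thin lens of focal length f."""
    return [[1.0, 0.0], [-1.0 / f, 1.0]]

def free_space(d):
    """Ray transfer matrix for propagation over a distance d."""
    return [[1.0, d], [0.0, 1.0]]

def stratton_system(f):
    """First lens, then a gap of 2f, then the second lens (composed right to left)."""
    return matmul(thin_lens(f), matmul(free_space(2.0 * f), thin_lens(f)))

f = 0.05  # hypothetical focal length in metres
system = stratton_system(f)

# system[1][0] is (numerically) zero, so the pair is afocal: parallel rays stay parallel.
# system[1][1] is -1, so every ray angle is negated: the view is inverted at unity magnification.
```

The zero lower-left entry is what makes the device a telescope rather than a focusing system, and the value -1 on the diagonal is the inversion Stratton experienced.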

Others followed in Stratton's footsteps by living their day-to-day lives

(eating, swimming, cycling, etc.) through left-right reversing

eyeglasses, prisms, and other optics [Kohler 64, Dolezal 82]

that were neither examples of augmented reality nor augmented virtuality

(and for which the optical see-through versus video see-through

distinction fails to properly address important similarities

and differences among various such devices).

Because a broad range of devices modify human

perception, mediated reality has been proposed as a more general framework

that includes the reality-virtuality continuum, as well as devices

for modifying as well as mixing these various aspects of reality and

virtuality [Mann 94, Mann 01].

Indeed, mediated reality has been shown to be useful in

deliberately diminishing reality, such as by

filtering out advertisements [Mann and Fung 02]. The HOLZER

(Homographically Obliterating Labels by Zeroing, Enhancement or Replacement)

system is a diminished reality system that filters out billboards and

other advertising material, replacing them with blank spaces or other, more

useful material [Mann and Niedzviecki 01].
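Replacing a planar patch such as a billboard rests on a homography between a rectangle and the quadrilateral the patch occupies in the image. The sketch below is not the HOLZER implementation; it assumes the four corner pixels are already known, uses the standard closed-form mapping from the unit square to a quadrilateral, and blanks the patch by forward sampling. The function names and the tiny test frame are hypothetical.

```python
def square_to_quad(quad):
    """Homography coefficients (a, b, c, d, e, f, g, h) taking the unit square
    corners (0,0), (1,0), (1,1), (0,1) to the four (x, y) points of quad."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = quad
    dx1, dx2, sx = x1 - x2, x3 - x2, x0 - x1 + x2 - x3
    dy1, dy2, sy = y1 - y2, y3 - y2, y0 - y1 + y2 - y3
    den = dx1 * dy2 - dx2 * dy1          # zero only for a degenerate quad
    g = (sx * dy2 - dx2 * sy) / den
    h = (dx1 * sy - sx * dy1) / den
    return (x1 - x0 + g * x1, x3 - x0 + h * x3, x0,
            y1 - y0 + g * y1, y3 - y0 + h * y3, y0, g, h)

def project(H, u, v):
    """Map a point (u, v) of the unit square into image coordinates (x, y)."""
    a, b, c, d, e, f, g, h = H
    w = g * u + h * v + 1.0
    return ((a * u + b * v + c) / w, (d * u + e * v + f) / w)

def blank_out(image, quad, fill=0, samples=64):
    """Overwrite the planar patch bounded by quad with a flat fill value."""
    H = square_to_quad(quad)
    for i in range(samples + 1):
        for j in range(samples + 1):
            x, y = project(H, i / samples, j / samples)
            col, row = int(round(x)), int(round(y))
            if 0 <= row < len(image) and 0 <= col < len(image[0]):
                image[row][col] = fill
    return image

# Hypothetical 8x8 frame with a skewed "billboard" at these corner pixels (x, y).
frame = [[9] * 8 for _ in range(8)]
billboard = [(1, 1), (6, 2), (5, 6), (2, 5)]
blank_out(frame, billboard)
```

Replacing rather than blanking would sample a substitute image at (u, v) instead of writing a constant; a practical system would also inverse-map every output pixel to avoid gaps.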

Mediated reality relates to Feiner's distinction of virtual reality and

augmented reality as follows:

"whereas virtual reality brashly aims to replace the real world,

augmented reality respectfully supplements it." [Feiner 02]

Mediated reality, by contrast, modifies it.

Since the 1970s the author has been exploring

electronically mediated environments using body-borne computers.

These explorations in Computer Mediated Reality were

an attempt at creating a new way of experiencing the perceptual world,

using a variety of different kinds of sensors, transducers, and other

body-borne devices controlled by a wearable

computer [Mann 01].

Early on, the author recognized the utility of computer

mediated perception, such as the ability to see in different spectral bands

(Figure 3 below) and to share this computer mediated vision with remote

experts in real time (Figure 4).

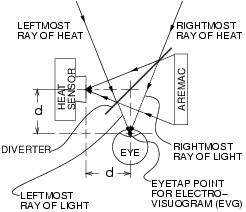

Figure 3: There was no doubt that Mediated Reality had practical uses.

(at left) Author (looking down at the mop he is holding)

wearing a thermal EyeTap wearable computer

system for seeing heat. This device modified

the author's visual perception of the world,

and also allowed others to communicate with the

author by modifying his visual perception.

A bucket of 500 degree asphalt is present in the foreground.

(at right) Thermal EyeTap principle of operation:

Rays of thermal energy that would otherwise pass through

the center of projection of the eye (EYE)

are diverted by a specially made 45 degree "hot mirror"

(DIVERTER) that reflects heat, into a heat sensor.

This effectively locates the heat sensor at the center

of projection of the eye (EYETAP POINT).

A computer controlled

light synthesizer (AREMAC) is controlled by a wearable computer

to reconstruct rays of heat

as rays of visible light that are each collinear with the

corresponding ray of heat. The principal point on the

diverter is equidistant to the center of the iris of the eye

and the center of projection of the sensor (HEAT SENSOR).

(This distance, denoted "d", is called the eyetap distance.)

The light synthesizer (AREMAC) is also used to draw on the

wearer's retina, under computer program control, to facilitate

communication with (including annotation by) a remote roofing

expert.
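The geometry in the Figure 3 caption can be sketched in two dimensions, assuming an ideal flat diverter and treating the eye and sensor as single points. Placing the sensor's center of projection at the mirror image of the eye's makes the two equidistant from the principal point, and any ray aimed at the eye is diverted straight to the sensor. The numbers and names below are hypothetical.

```python
import math

def reflect_point(p, m, n):
    """Mirror point p across the line through m with unit normal n."""
    k = 2.0 * ((p[0] - m[0]) * n[0] + (p[1] - m[1]) * n[1])
    return (p[0] - k * n[0], p[1] - k * n[1])

def reflect_direction(v, n):
    """Mirror a direction vector v about a surface with unit normal n."""
    k = 2.0 * (v[0] * n[0] + v[1] * n[1])
    return (v[0] - k * n[0], v[1] - k * n[1])

d = 0.02                                        # hypothetical eyetap distance, metres
eye = (0.0, 0.0)                                # center of projection of the eye
principal = (d, 0.0)                            # principal point on the diverter
normal = (1 / math.sqrt(2), 1 / math.sqrt(2))   # 45 degree diverter

# The sensor's center of projection sits at the mirror image of the eye's.
sensor = reflect_point(eye, principal, normal)

# A ray from a scene point aimed at the eye is intercepted by the diverter.
scene = (10.0, 1.0)
aim = (eye[0] - scene[0], eye[1] - scene[1])
t = -((scene[0] - d) * normal[0] + scene[1] * normal[1]) / (
    aim[0] * normal[0] + aim[1] * normal[1])
hit = (scene[0] + t * aim[0], scene[1] + t * aim[1])
out = reflect_direction(aim, normal)

# A zero cross product means the diverted ray heads straight for the sensor.
to_sensor = (sensor[0] - hit[0], sensor[1] - hit[1])
cross = out[0] * to_sensor[1] - out[1] * to_sensor[0]
```

Because the sensor sees exactly the rays that would have reached the eye, light resynthesized along the same lines can be collinear with the rays it replaces.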

Figure 4: Practical application of collaborative Computer Mediated Reality:

(leftmost) First person perspective as captured by the EyeTap device.

Author's hands are visible grasping the mop.

(left of middle)

Mop and hot asphalt as viewed through wearer's right eye.

(middle) After mopping hot asphalt onto the roof surface,

a base sheet is rolled down (bucket of hot asphalt shows as

white in the upper right area of the frame).

(right of middle)

The thermal EyeTap is useful for "seeing through" the top layers

of felt or fiberglass, to determine heat flow underneath.

(rightmost) The kettle (upper right of frame) shows up as white

(approx. 500 degrees) whereas the

propane cylinder (bottom of frame) and the propane hose

supplying it show up as black, because the cylinder and hose

are cold due to the expansion of the propane gas

(Joule-Thomson effect).

The thermal EyeTap was also useful when the kettle caught on

fire because of its ability to see through smoke. Kettle fires

are easy to extinguish (simply by slamming the lid shut)

if the kettle can be seen through the thick black smoke given

off by the burning asphalt.

Such devices can be used to modify the visual perception of reality within

certain mediation zones (e.g. only one eye rather than both eyes, or

only a portion of that eye), giving rise to partially

mediated reality [Mann 01]. Moreover

these devices can also be worn with prescription eyeglass lenses,

or even have prescription eyeglass lenses incorporated into the design.

Prescription eyeglasses are themselves partial reality mediators,

having a peripheral zone, a

transition zone (frames or lens edges), and

one or more mediation zones (one or more lenses, or lens zones as in

bifocal, trifocal, etc., lenses).

Ecological origins of mediated reality

An important element of Stratton's work was

that he wore the device in his ordinary everyday life.

If performed on other subjects, such

work might have far exceeded what university ethics committees and

the protocols of "informed consent" would allow, and it ran against the tendency of many

academics to confine their work to labs, controlled spaces, and existing literature.

Unlike traditional scientific experiments that take place in a controlled

lab-like setting (and therefore do not always translate well into the real

world), Stratton's approach required a continuous rather than intermittent

commitment. For example, he would remove the eyewear only to bathe or

sleep, and he even kept his eyes closed during bathing, to ensure that no

un-mediated light from the outside world could get into his eyes

directly [Stratton 96, Stratton 97].

This work involved a commitment on his part, to devote his very existence --

his personal life -- to science.

Stratton captured a certain important human element in his broad seminal

work, which laid the foundation for others to later do carefully controlled

lab experiments within narrower academic disciplines.

Moreover, his approach was one that broke down the boundaries between work

and leisure activity, as well as the boundaries between the laboratory and

the real world.

Similarly, it is desired to integrate computer mediated reality into daily life.

The past 22 years of wearing computerized reality mediators in everyday life

have provided the author with insight into sociological

factors, such as how others react to such devices [Mann and Niedzviecki 01].

In particular, this has given rise to a desire to design and build

reality mediators that do not have an unusual appearance.

Reality Mediators for everyday life

Typical virtual reality headsets and other cumbersome devices are not well

suited to ordinary everyday life because of their bulky, constrained,

and tethered operation, as well as their unusual appearance.

Indeed, it is preferable that commonly used reality mediators, such as hearing

aids and personal eyeglasses, have an unobtrusive (or hidden) appearance,

or be designed to be sleek, slender, and fashionable.

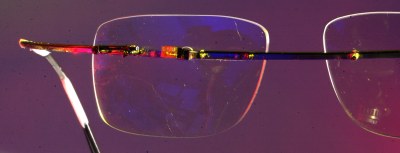

Figure 5 shows how miniaturization of components makes this evolution possible.

Figure 5: (at left) EyeTap devices, such as this infrared night vision

computer system, when the components are visible, tend to

have a frightening appearance, owing to the "glass eye" effect,

in which optics, projected to the center of projection of a lens

of the tapped eye, are visible to others. Here we see the image

of the infrared camera located in the center of the author's

right eye.

(at right) Author's recent (1996) reality mediator design

with systems built into dark glasses tends to mitigate this

undesirable social effect. The eyeglass lenses are also transparent

in the infrared, allowing the night vision portion of the apparatus

to take over in low light, without loss of gain. In the visible

portion of the spectrum, a 10dB loss of sensitivity is incurred

to conceal the color sensor elements and optics.

The author's wearable computer reality mediators have evolved from

headsets of the 1970s, to eyeglasses with optics outside the glasses

in the 1980s, to eyeglasses with the optics built inside the glasses in the

1990s [Mann 01] to eyeglasses with mediation zones built into

the frames, lens edges, or the cut lines of bifocal lenses

in the year 2000 (e.g. exit pupil and associated optics concealed by

the transition regions).

Reality mediators that have the capability to measure and resynthesize

electromagnetic energy that would otherwise pass through the center of

projection of a lens of an eye of a user, such as shown

in Figures 3, 4, and 5,

are referred to as EyeTap [Mann 01] devices.

These devices divert at least a portion of eyeward bound light into a

measurement system that measures how much light would have entered the

eye in the absence of the device. Some EyeTap devices

use a focus control to reconstruct light in a depth plane that

moves to follow subject matter of interest. Others

reconstruct light in a wide range of depth planes,

in some cases having infinite or near infinite depth of field.

Reality mediators for everyday life

Even a very small size optical element, when placed within the

open area of an eyeglass lens, looks unusual.

Thus eyeglasses having display optics embedded in an eyeglass lens,

such as those made by Microoptical (http://www.microopticalcorp.com),

still appear unusual. In normal conversation, people tend to look one another

right in the eye, and therefore will notice even the slightest speck of

dust on an eyeglass lens.

Therefore, the author has proposed that any intermediary elements

to be installed in an eyeglass lens be installed in the transition

zones, e.g. as transfer functions between peripheral

vision and the eyeglass lens itself (e.g. in the frames), or as

the transfer functions between different portions of a

multifocal (bifocal, trifocal, etc.) eyeglass lens.

Consider first the implementation of a reality mediator as part of

the transfer function of the glass-to-glass transition region

of bifocal eyeglasses.

In the case in which a monocular version of

the apparatus is being used, the apparatus is built into

one lens, and a dummy version of the diverter

portion of the apparatus is positioned in the other lens for

visual symmetry.

It was found that such an arrangement tended to call less attention

to itself than when only one diverter was used for a monocular implementation.

These diverters may be integrated into

the lenses in such a manner to have the appearance of

the lenses in ordinary bifocal eyeglasses.

Where the wearer does not require bifocal lenses, the cut line in the

lens can still be made, such that the transfer function simply

defines a transition between two lenses of equal focal length.

Transition zone reality mediators:

Reversal of the roles of eyeglass frames and eyeglass lenses

The size of the mediation zone that can be concealed in the cut-line of

a bifocal eyeglass lens is somewhat limited.

The peripheral transfer function (e.g. at the edges of the glass,

or the eyeglass frame boundaries) provides a more ideal location

for a partial reality mediator, because the device can be concealed directly

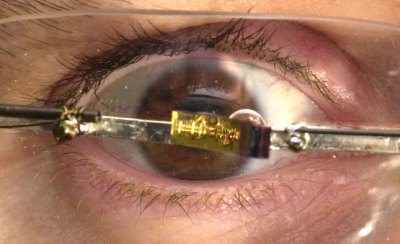

within the frames of the eyeglasses, as shown in Figure 6 below.

Reality mediator incorporated into the eyeglass frames:

Upon close inspection with the unaided eye, the eyeglasses have a normal

appearance.

Using a magnifier, macro lens, etc., we can see the diverter

when magnified in an extreme close-up.

Side view shows sleek and slender design, where apparatus can be hidden

in hair, at back of wearer's head.

In view of such a concealment opportunity, the author envisioned a new kind

of eyeglass design in which the frames would come right through the center

of the visual field. With materials and assistance provided by Rapp

Optical, eyeglass frames were assembled using standard

photochromic prescription lenses drilled in two places on the left eye,

and four places on the right eye, to accommodate a break in the

eyeglass frame along the right eye (the right lens being held on with

two miniature bolts on either side of the break).

The author then bonded fiber optic bundles concealed by the frames,

to locate the camera and aremac in back of the device.

This research prototype proves the

viability of using eyeglass frames as a mediating element.

The frames, being slender (e.g. two millimeters

wide), do not appreciably interfere with normal vision, because they are close enough

to the eye to be out of focus.

But even if the frames were wider, they could be made out of a see-through

material, or they could be seen through by way of the illusory

transparency afforded by the EyeTap principle [Mann 01].

Therefore, there is definite merit in seeing the world through eyeglass frames.

In particular, the eyeglasses of Figure 6

were crude and simple. A more sophisticated design could use a plastic

coating to completely conceal all the elements, so that even when

examined under a microscope, evidence of the EyeTap would not be visible.

Conclusions and further research

Wearable Computer Mediated Reality was presented as a new framework for

visual reality modification in everyday life.

In particular, a new form of partial reality mediator having the

appearance of a new kind of stylish eyeglasses, and suitable for

use in ordinary life, was presented.

In this design, the roles of eyeglass lenses and eyeglass frames are reversed.

The eyeglass lenses become the decorative element, whereas the eyeglass

frames become the element that the wearer sees through.

Acknowledgements

The author would like to thank Mel Rapp, of Toronto-based

Rapp Optical, for assistance in recovering from an

unfortunate incident that had involved the damage of the

author's eyeglasses, and for providing the author much in the

way of motivation to persevere through these difficult times.

References

Caudell, T. and Mizell, D. (1992),

"Augmented Reality: An Application of Heads-Up Display Technology to

Manual Manufacturing Processes."

Proc. Hawaii International Conf. on Systems Science, Vol. 2, pp 659--669.

Dolezal, H. (1982),

"Living in a world transformed."

Academic press series in cognition and perception,

Academic press, Chicago, Illinois.

Drascic, D. and Milgram, P. (1996),

"Perceptual Issues in Augmented Reality."

SPIE Volume 2653: Stereoscopic Displays and Virtual Reality Systems III,

San Jose, February, pp. 123-134.

Feiner, S. (2002),

"Augmented Reality: A New Way of Seeing."

Scientific American,

Apr 2002, pp. 52-62.

Kohler, I. (1964),

"The formation and transformation of the perceptual world."

Psychological issues, International university press,

227 West 13 Street, 3(4), monograph 12.

Mann, S. (1994),

"Mediated Reality."

M.I.T. M.L. Technical Report 260, Cambridge, Massachusetts.

Mann, S. (2001),

"Intelligent Image Processing."

John Wiley and Sons, 384pp, ISBN: 0-471-40637-6.

Mann, S. and Fung, J. (2002),

"EyeTap devices for augmented, deliberately diminished, or otherwise

altered visual perception of rigid planar patches of real world scenes."

PRESENCE, 11 (2), pp 158--175, MIT Press.

Mann, S. with Niedzviecki, H. (2001),

"Cyborg:

Digital Destiny and Human Possibility in the Age of the Wearable

Computer." Random House (Doubleday), 288pp, ISBN: 0-385-65825-7.

Milgram, P. (1994),

"Augmented Reality: A Class of Displays on

the Reality-Virtuality Continuum."

SPIE Vol. 2351, Telemanipulator and Telepresence Technologies.

Milgram, P. and Colquhoun, H. (1999),

"A framework for relating head mounted displays to mixed reality displays."

Proceedings of the human factors and ergonomics society,

43rd annual meeting, San Jose, pp123-134.

Starner, T., Mann, S., Rhodes, B., Levine, J., Healey, J., Kirsch, D.,

Picard, R., and Pentland, A. (1997),

"Augmented Reality Through Wearable Computing."

PRESENCE, 6(4), MIT Press.

Steuer, J. (1992),

"Defining Virtual Reality: Dimensions Determining Telepresence."

Journal of Communication, 42(4), pp 73-93.

Stratton, G. (1896),

"Some preliminary experiments on vision."

Psychological Review.

Stratton, G. (1897),

"Vision without inversion of the retinal image."

Psychological Review.

Sutherland, I. (1968),

"A head-mounted three dimensional display."

Proc. Fall Joint Computer Conference, Thompson Books,

Wash. D.C., pp757-764.

Tamura, H., Yamamoto, H., and Katayama, A. (2002),

"Role of Vision and Graphics in Building a Mixed Reality Space."

MR Systems Laboratory, Canon Inc.,

Proc. Int. Workshop on Pattern Recognition and Image

Understanding for Visual Information, pp1-8.