TEI Studio-workshop 2015:

Wearable Computing with 3D Augmediated Reality, Digital Eye Glass, Egography (Egocentric/First-Person Photographic/Videographic Gesture Sensing), and Veillance

Wearable Computing, 3D Aug* Reality, Photographic/Videographic Gesture Sensing, and Veillance, as published in the ACM Digital Library: link.

Talk slides from introduction

Friday, 16 January, 2015, Stanford University, at TEI2015

Organizers:

- Steve Mann, widely recognized as "The Father of Wearable Computing" (IEEE ISSCC2000), who "persisted in his vision and ended up founding a new discipline" (Negroponte, 2001);

- Steve Feiner, Augmented Reality pioneer, elected to the CHI Academy, recipient of the IEEE VGTC 2014 Virtual Reality Career Award and the ACM UIST 2010 Lasting Impact Award, and winner of best paper awards at ACM UIST, ACM CHI, ACM VRST, and IEEE ISMAR;

- Stefano Baldassi, Stanford, NYU, and Head of Perceptual Interactions and User Research at Meta;

- Soren Harner, Stanford, Vice President of Engineering at Meta;

- Jayse Hansen, Hollywood's #1 UI (User-Interface) designer, and creator of UIs for feature films like Marvel's The Avengers and Iron Man films, Ender's Game, and The Hunger Games: Catching Fire/Mockingjay Parts 1 and 2; and

- Ryan Janzen, http://ryanjanzen.ca/

One-day Studio-workshop: Learn the world's most advanced AR system, Meta Spaceglass, and create games with it.

Website: http://wearcam.org/tei2015/

Registration: http://www.tei-conf.org/15/registration/

PDF: tei2015.pdf

Date: Friday, 16 January, 2015

Background:

The general-purpose wearable multimedia computer has a long history, dating back to the 1970s.

Recently, wearable computing has become mainstream.

Wearable computers and Generation-5 Digital Eye Glass easily recognize a user's own gestures, forming the basis for shared Augmediated Reality. This Studio-Workshop presents the latest in wearable AR. Participants will sculpt 3D objects using hand gestures, print them on a 3D printer, and create Unity 3D art+games using computational lightpainting.

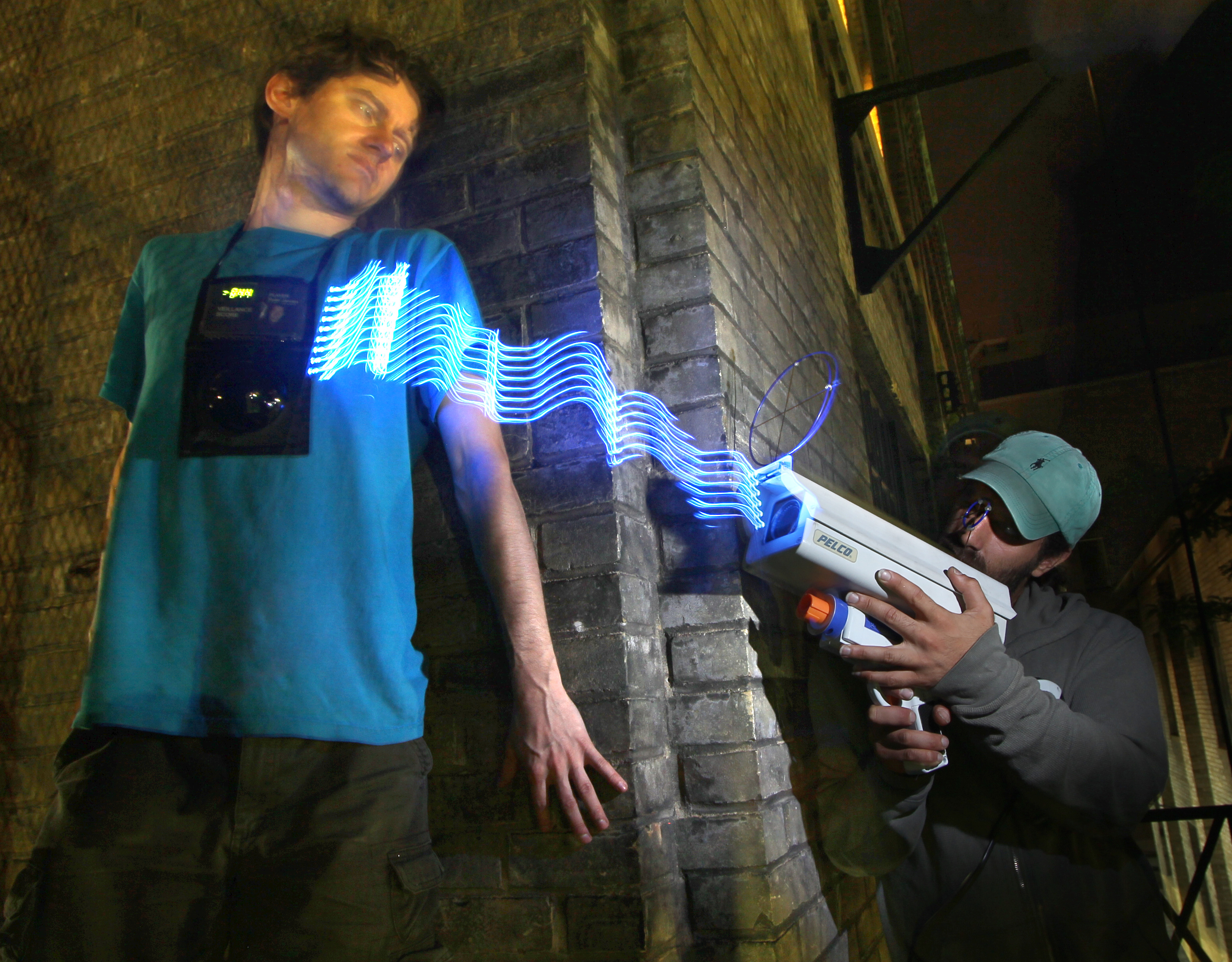

Participants will also learn how to use 3D gesture-based AR to visualize and understand real-world phenomena, including being able to see sound waves, see radio waves, and see sight itself (i.e., to visualize vision itself, through abakographic user-interfaces that interact with the time-reversed lightfield, also known as the "sightfield"), as shown below:

(Surveillance camera visualized with Meta Spaceglass and the "magic meta wand")

Here's a portrait of Thalmic's Chris Goodine holding a gun camera in front of the mirror at FITC Wearables; we can see the reflection of the "veillance flux" in the mirror:


Find hidden cameras using abakography:

(found a camera hidden in the right eye of a cute stuffed animal)

and visualize the veillance flux of the cameras using 3D AR.

Participants will learn the Meta SDK and how to build multisensory 3D AR interfaces and Natural User Interfaces, including some that interact with the real world. Concepts taught will include:

- Integral Kinematics, introduced through the development of novel 3D AR NUI fitness apps, including the MannFit System;

- Abakography (3D computational lightpainting) for the visualization of sound waves, radio waves, and surveillance flux (sightfields) using surveilluminescence (lights that change color when they are being watched by a camera).

The workshop takes place in the Stanford Design Loft (the graduate student design loft):

http://mwdes.com/index.php?/design/stanford-design-loft-redesign/

Abakographs from workshop:
