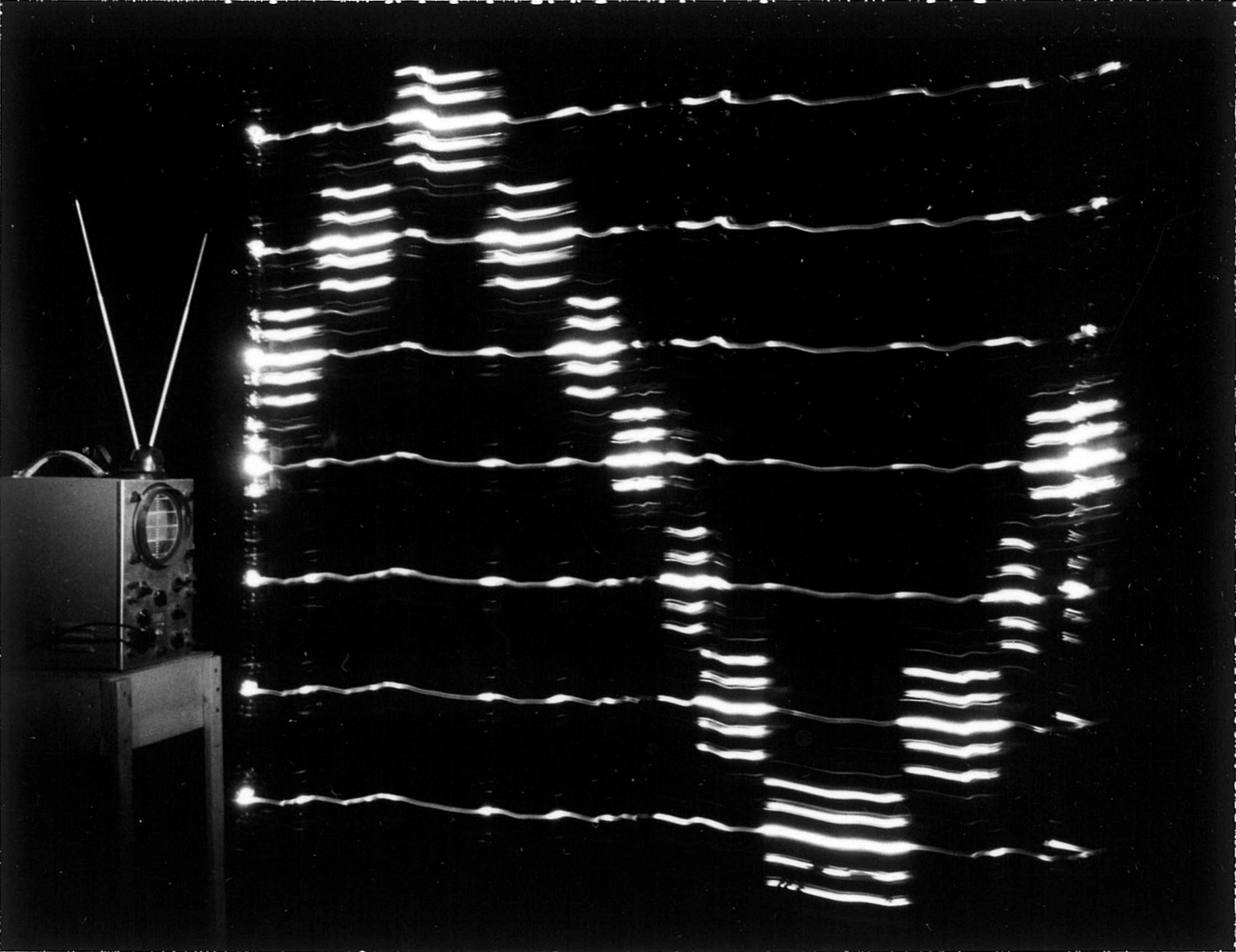

When I was 12 years old, back in the 1970s, I mounted an array of lights to a long wand that I could wave through the air in a darkened room while the lights were driven by a wearable amplifier and switching system I built. This allowed me to sense and receive various things and "display" them on the light stick, so that I could see sound waves, radio waves, and most interestingly, sense sensing itself (e.g. visualize vision from a surveillance camera).
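As a rough illustration of the idea (not the original analog amplifier and switching circuit), the sketch below assumes a "dot" style display in which the amplitude of the sensed signal selects which single light in the array is lit at each position of the sweep. The function names, the normalized input range of -1.0 to 1.0, and the text rendering are all hypothetical choices made for this example.

```python
import math


def light_index(sample, num_lights):
    """Map a sample in [-1.0, 1.0] to the index of the one light to turn on.

    Assumption: a dot-style display where amplitude selects a single light.
    Out-of-range samples are clipped to the ends of the array.
    """
    clipped = max(-1.0, min(1.0, sample))
    # Scale [-1, 1] onto [0, num_lights - 1] and round to the nearest light.
    return round((clipped + 1.0) / 2.0 * (num_lights - 1))


def render_sweep(samples, num_lights):
    """Render one sweep of the wand as text.

    Each column is one position along the sweep; each row is one light,
    with the top row being the light at the far end of the array.
    """
    rows = []
    for level in range(num_lights - 1, -1, -1):
        row = "".join(
            "*" if light_index(s, num_lights) == level else "."
            for s in samples
        )
        rows.append(row)
    return "\n".join(rows)


if __name__ == "__main__":
    # Hypothetical input: two cycles of a sine wave sampled along the sweep.
    wave = [math.sin(2 * math.pi * 2 * i / 48) for i in range(48)]
    print(render_sweep(wave, 9))
```

In a long-exposure photograph, or to the eye in a dark room, the lit lights at successive positions trace out the waveform in space, which is the effect this text rendering imitates.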

I used to volunteer at a local TV repair shop, where I gained access to television receivers and television cameras in the early days of television. There I played around with video feedback and noticed that waving a television receiver around in the dark basement of the TV store would "paint out" the sight lines of a TV camera in the room.

See http://wearcam.org/surveilluminescence_videofeedback_pseudocolor_lowres.jpg

For me this was an important discovery -- I started strapping all sorts of equipment to my body, and building powerful backpack-based television receivers with vacuum tubes, and simple logic circuits made with stepping relays and other parts from old surplus automatic telephone switching equipment.

A meta conversation is a conversation about conversations.

A meta joke is a joke about jokes.

What I was doing was meta-sensing: the sensing of sensors and the sensing of their capacity to sense.

It was a kind of metavision, metaveillance, meta-reality: waving lights around in the dark basement, where these arrays of lights were essentially 3D extended reality displays. I saw a vision for a future in which everyone could see sound waves, radio waves, television signals, and sight itself, overlaid on top of visual reality.

I called this invention the Sequential Wave Imprinting Machine (S.W.I.M.) and fifteen years later, the S.W.I.M. and its associated "Wearable Computer" formed the basis of my portfolio for my application to the Media Lab at MIT (Massachusetts Institute of Technology) where I was accepted.

In the words of our [MIT Media Lab's] Director and Founder, Nicholas Negroponte:

"Steve Mann ... brought with him an idea... And when he arrived here a lot of people sort of said wow this is very interesting... I think it's probably one of the best examples we have of where somebody brought with them an extraordinarily interesting seed, and then ... it grew, and there are many people now, so called cyborgs in the Media Lab and people working on wearable computers all over the place." -- Nicholas Negroponte, Founder, Director, and Chairman, MIT Media Lab

"Steve Mann is the perfect example of someone... who persisted in his vision and ended up founding a new discipline." -- Nicholas Negroponte, Founder, Director, and Chairman, 1997

At MIT in the early 1990s I developed a gesture-based 3D augmented reality system [1].

I named the 3D gesture-based wearable computer, wearable AR/XR systems, etc., "Synthetic Synesthesia of the Sixth Sense". That is quite a mouthful to say, so we generally just called it "SixthSense" [2][3].

This, I think, is the future of wearable computing and AR/XR: 3D gesture-based wearable computing, sensing, and metasensing.

It brings together "Wearables" and IoT (Internet of Things).

Recently we created a Wearables and IoT alliance and conference to explore this future of Wearables and IoT. IoT is sensing -- basically surveillance. Wearables is an opposite of surveillance called sousveillance.

We put these two together to create "Veillance", and a conference on Veillance, along with a defining research paper [Minsky, Kurzweil, Mann 2013].

Back in my childhood I formulated a vision of the future in which the world of signals and sensing and information would be merged with the real world. I call this "Fieldary User Interfaces" -- using information fields to interact with the real world, so that the virtual world of information and the real world become one and the same.

Thanks to Antonin Kimla, York Radio and TV, for providing mentorship and access to camera and television equipment.

Montage of the above 36 exposures

See also