Modules

There are six modules (corresponding to Chapters 1 to 6 of the textbook), plus a brief introduction, Module 0.

- Module 0, Introduction:

- Introduction, motivation, and historical context.

- See AWE (Augmented World Expo) 2015 Headline Keynote Address.

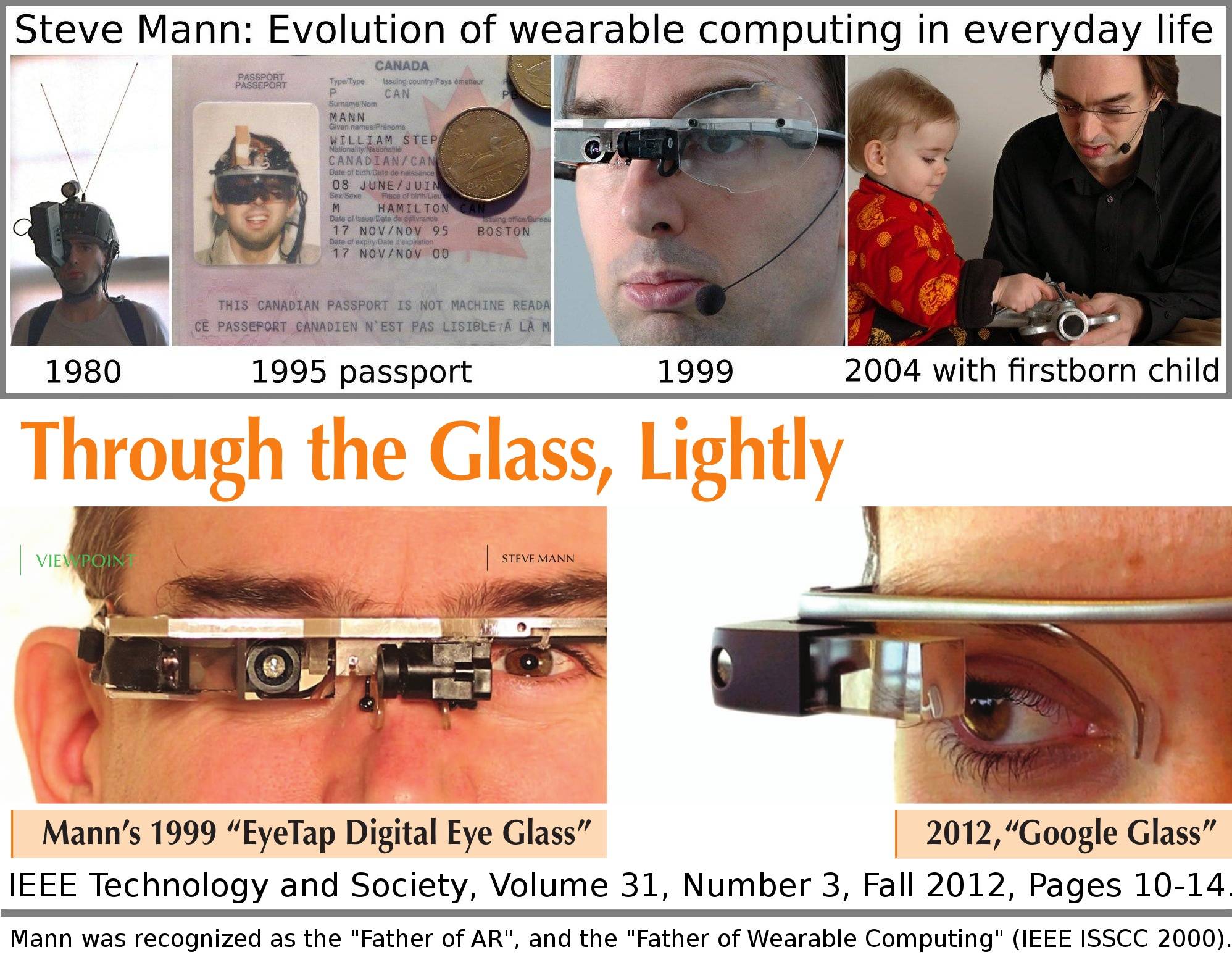

- Wearable Augmented Reality from 1974.

- Case studies, Oculus Rift (2012, 2013), AR versus VR

- See the AR versus VR debate from Augmented World Expo (AWE2015).

- Introduction to some of the companies we (Rotman CDL) have helped bring into existence:

- 2013: Thalmic Labs leveraged CDL to raise the majority of its seed funding, then a $14.5M Series A.

- 2014: Bionym developed from fresh startup to major player on the wearable tech scene with help from U of T's Creative Destruction Lab.

- IoT and Wearables: Smart Cities and Wearable Computing as a form of urban design (Urban Scalespace Theory, S. Mann, 2001).

- Capturing the Future.

- Module 1, Personal Cybernetics and Humanistic Intelligence:

- Humanistic Intelligence, Mann 1998; Wearable Computing and IoT (Internet of Things); the scalespace theory; sur/sousveillance; integrity; VeillanceContract; MedialityAxis.

- Overview of Mobile and Wearable Computing, Augmented Reality, and Internet of Things.

- Mann's IEEE T&S 2013 SMARTWORLD (Wearables + IoT + AR alliance).

- The fundamental axes of the Wearables + IoT + AR space, Mann 2001.

- Module 2, Personal Imaging:

- Wearcam; SmartWatch; 6ense; ARhistory; MedialityAxis; EyeBorg implant; SWIM for phenomenaugmented reality.

- Historical overview:

- 1943, McCollum: cathode ray tubes in an eyeglass frame.

- 1961, Philco: HMD for remote video surveillance. Comeau and Bryan, employees of Philco Corporation, constructed the first actual head-mounted display (Headsight) in 1961 (the theory of an HMD dates back to 1956).

- 1968, Ivan Sutherland: computer graphics and HMD.

- 1970s, M. Krueger: video projection system (non-portable, fixed to walls, etc.).

- 1970s and 1980s, Mann: mobile, wearable, wireless, free-roaming AR: wearable computing, wireless, sensing, and metasensing with light bulbs.

- Phenomenal Augmented Reality: real-world physical phenomena as the fundamental basis of mobile and wearable AR.

- The Silicon Switch: http://www.google.ee/patents/US3452215

- The PHENOMENAmplifier and the S.W.I.M. (Mann 1974).

- Lutron Capri: Joel S. Spira, US3032688 (filed 15 Jul 1959, published 1 May 1962).

- Triac dimmer: 1966, Eugene Alessio, US3452215.

- Related topics: Sensorama; mobile and wearable computing; smartwatches; contact lens displays; the history of the triac light dimmer.

- Module 3, EyeTap:

- The Mediality Axis. Theory of Glass.

- Module 4, Comparametric Equations:

- Fundamentals of sensing for Wearables + IoT + AR: the 1-pixel camera (making a 1-pixel camera); Phenomenaugmented Reality with the 1-pixel camera. Metasensing is HI; case study: Confidence Maps in gesture-based wearable computing. Comparametric Equations and Quantum Field Theory; physics (quantum and classical).

- Module 5, Lightspace:

- Lightfields, Lytro, dual spaces, nLux, etc.

- Module 6, Light orbits:

- Orbits, SLAM, etc.

Course website: http://wearcam.org/ece516/

Our Spaceglasses product won "Best Heads-Up Display" at CES2014.

See a review of our product by Laptop Magazine: http://blog.laptopmag.com/meta-pro-glasses-hands-on

Our InteraXon product was said to be the most important wearable of 2014.

Each year we create totally new material to keep the course up-to-date, and we also adapt the course to student interests each year. If there's something you want covered in this course, be sure to let us know!

Assignments from previous years (each one was due at the beginning of lab period):

Assignment 1 for 2015: Fizzveillance Challenge.

Assignment 2 for 2015: Make a double-exposure picture.
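A film double exposure accumulates light, so the two exposures add in the photoquantity (amount-of-light) domain rather than in pixel values. The sketch below illustrates this under a loudly stated assumption: a simple power-law camera response with a hypothetical exponent `GAMMA = 2.2`. A real camera should be calibrated instead (for example with the comparagraph of Assignment 4). Images are plain nested lists of pixel values in [0, 1]; all function names are illustrative.

```python
"""Simulate a film-style double exposure from two digital images.

Assumption (hypothetical, for illustration): the camera response is a
power law, pixel = q ** (1 / GAMMA), with pixel values in [0, 1].
"""

GAMMA = 2.2  # hypothetical response exponent; calibrate your own camera


def to_photoquantity(pixel: float) -> float:
    """Undo the assumed response: pixel value -> photoquantity q."""
    return pixel ** GAMMA


def to_pixel(q: float) -> float:
    """Apply the assumed response, clipping where the sensor saturates."""
    return min(q, 1.0) ** (1.0 / GAMMA)


def double_exposure(img_a, img_b):
    """Add two exposures in the photoquantity domain, as film would."""
    return [
        [to_pixel(to_photoquantity(a) + to_photoquantity(b))
         for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(img_a, img_b)
    ]


if __name__ == "__main__":
    # Two mid-grey pixels combine to a brighter value, not to mid-grey.
    print(double_exposure([[0.5, 0.0]], [[0.5, 1.0]]))
```

Under this assumed response, two mid-grey (0.5) pixels combine to about 0.69, whereas averaging the pixel values would leave them at 0.5 and summing them would saturate at 1.0.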

Assignment 3 for 2015: Make a 1-pixel camera, as described in more detail in the lecture of Monday 2015feb02.

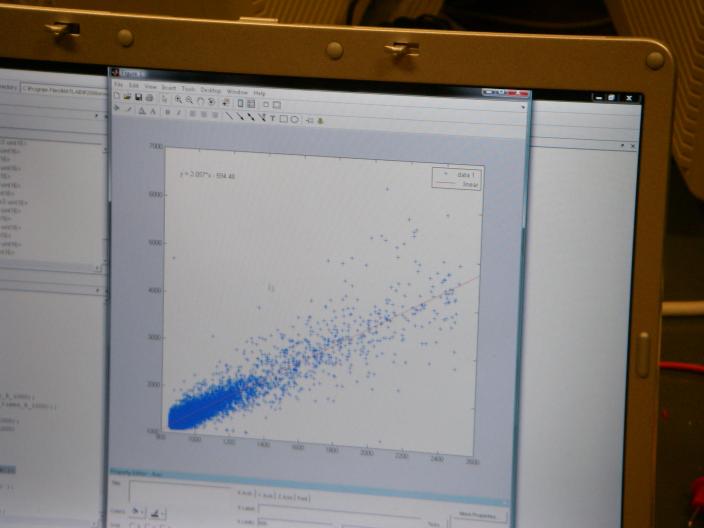

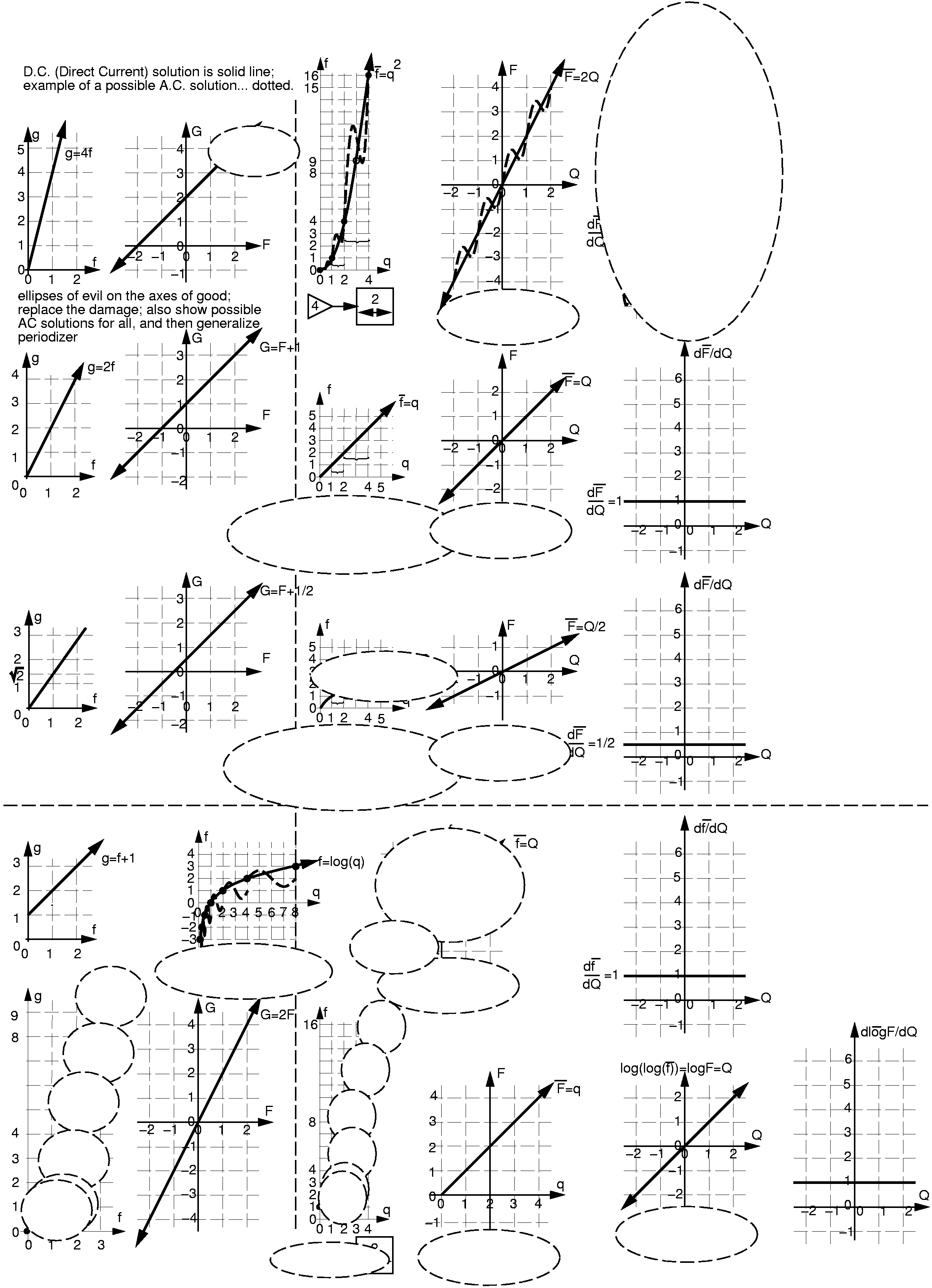

Assignment 4 for 2015: Calibrate your 1-pixel camera as described in the lecture of Monday 2015feb09. See "Photocell experiment" below. In particular, create a comparagraph of f(kq) vs. f(q), with well-labelled axes, data points, and rigorously defined variables. Take one data set while trying to exactly double/halve the quantity of light, and graph it. Graph another data set while changing the quantity of light by steps of a different ratio. How would you fit a function to this relationship? Is it possible to figure out the original function f(q) vs. q? What does this represent?
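One way to start on the fitting question is a minimal sketch that assumes a power-law response f(q) = alpha * q**gamma (an assumption for illustration, not the only model used in comparametric analysis). Under that model f(kq) = k**gamma * f(q), so the comparagraph is a straight line through the origin with slope k**gamma, and gamma follows from a least-squares slope when k is known (k = 2 for the double/halve data set). The function names here are hypothetical.

```python
"""Estimate a power-law response from comparagraph data.

Assumption: f(q) = alpha * q**gamma, so f(k q) = k**gamma * f(q) and the
comparagraph of f(kq) against f(q) is a line through the origin with
slope k**gamma.
"""
import math


def fit_slope_through_origin(xs, ys):
    """Least-squares slope m for the model y = m * x (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / sxx


def estimate_gamma(f_q, f_kq, k):
    """Recover gamma from comparagraph data taken with light ratio k."""
    slope = fit_slope_through_origin(f_q, f_kq)
    return math.log(slope) / math.log(k)


# Synthetic check: generate readings from a known gamma, then recover it.
true_gamma, k = 0.6, 2.0
qs = [0.1, 0.2, 0.4, 0.8, 1.6]
f_q = [q ** true_gamma for q in qs]
f_kq = [(k * q) ** true_gamma for q in qs]
print(round(estimate_gamma(f_q, f_kq, k), 6))  # 0.6
```

With real photocell readings the points will scatter, and data sets taken at two different ratios k should give consistent gamma values if the power-law model fits. Under this model the response is recoverable only up to the scale alpha, since the units of q are arbitrary.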

Bonus marks for doing this with AC (alternating current) signals and quadrature detection (e.g. building an oscillator and detector circuit). See University of Colorado, Physics 3340, for an example.
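The principle behind quadrature detection (and behind a lock-in amplifier such as the SR510) can be sketched in software. The light source is modulated at a known reference frequency, and the photocell signal is multiplied by in-phase and 90-degree-shifted references and then averaged. This rejects the DC ambient light and any other frequencies. This is a sampled-signal illustration that assumes the record spans a whole number of reference cycles; it is not a substitute for building the oscillator and detector circuit, and `lock_in` is a hypothetical name.

```python
"""Software sketch of quadrature (lock-in) detection.

Assumptions: the light source is modulated at a known reference frequency
f_ref, the photocell signal is sampled at fs, and the record spans a whole
number of reference cycles.
"""
import math


def lock_in(samples, f_ref, fs):
    """Return (amplitude, phase) of the f_ref component of samples.

    Models the component as amplitude * cos(2*pi*f_ref*t - phase).
    """
    n = len(samples)
    in_phase = quadrature = 0.0
    for idx, s in enumerate(samples):
        angle = 2 * math.pi * f_ref * idx / fs
        in_phase += s * math.cos(angle)    # mix with the reference
        quadrature += s * math.sin(angle)  # mix with the shifted reference
    # Averaging acts as the low-pass filter; the factor 2 restores amplitude.
    i_avg, q_avg = 2 * in_phase / n, 2 * quadrature / n
    return math.hypot(i_avg, q_avg), math.atan2(q_avg, i_avg)


if __name__ == "__main__":
    fs, f_ref = 1000.0, 50.0
    # Hypothetical photocell signal: DC ambient light, a weak modulated
    # component, and strong 120 Hz flicker from room lighting.
    samples = [
        2.0
        + 0.3 * math.cos(2 * math.pi * f_ref * i / fs - 0.7)
        + 1.0 * math.cos(2 * math.pi * 120.0 * i / fs)
        for i in range(1000)
    ]
    print(lock_in(samples, f_ref, fs))  # approximately (0.3, 0.7)
```

The weak 0.3-amplitude component is recovered even though the ambient DC level and the flicker are several times larger.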

We also have some wave analyzers, as well as the SR510 lock-in amplifier, available.

Bonus marks are still available for feedbackography, but this time, let's "raise the bar" a bit (in fairness to those who got this working last time) and get the feedback extending over a further range (e.g. a greater distance from the camera with a good visible image). See an example here, and also here's some info on animations in .gif images.

Inventrepreneurship:

S. Mann's role as the Chief Scientist at Rotman School of Management's Creative Destruction Lab brings us a series of inventions we can learn from and work with; ask Prof. Mann for the web link URL. (Click for more examples of instructor's contributions)


ECE516 (formerly known as ECE1766), since 1998 (2015 is its 18th year)

Teaching assistants:

- Ryan Janzen: EA302

- Raymond Lo (back-and-forth between here and Meta in Silicon Valley, California).

Schedule for January 2015:

Hour-long lectures starting Mon 5pm, Wed. 5pm, and Thursday 3pm in WB119 (Wallberg Building, Room 119)

Lab: Fri. 12 noon to 3pm, in BA3135 or EA302 or an alternate location depending on the subject of the lab.

Important dates:

- Mon2015jan05: first ECE516 lecture (link);

- Sun2015jan18: last day to add courses for engineering undergraduates;

- 2015jan14: last day for ECE graduate students to submit their add form;

- 2015jan19: last day for ECE graduate students to add a course on ROSI;

- 2015feb23: last day for ECE graduate students to drop a course.

- 2015mar08: last day for undergraduate engineering students to drop a course.


- 2015apr10 = last day of classes

- 2015apr14-29 = exams

- For CS grad students, see http://web.cs.toronto.edu/program/currentgradstudents/gradprogram/2014-15courselisttimetable.htm

2015 Exam schedule; for the latest, check http://www.apsc.utoronto.ca/timetable/fes.aspx

2014's exam was: ECE516H1 Intelligent Image Processing, Type: X, Date: Apr 29, 2014, Time: 09:30 AM, BA-2175

Course structure of previous years (it will be customized to meet the interests of those enrolled each year, including 2015):

Each year I restructure the course to match the interests of the students enrolled, as well as to capture opportunities from new developments.

The final exam schedule is usually announced on this website, here, toward the end of the term.

Links to some useful materials

- Patent for "stitching together" pictures to make a panorama

- HDR (High Dynamic Range) imaging patent

- "The first report of digitally combining multiple pictures of the same scene to improve dynamic range appears to be Mann. [Mann 1993]" in "Estimation-theoretic approach to dynamic range enhancement using multiple exposures" by Robertson et al., JEI 12(2), p. 220, right column, line 26

- Local cache of Atmega 2560 summary and data sheet

- Free Arduino+Linux eBook, local cache here.

The author of the book mentions ECE516 course instructor Steve Mann:

"Manoel lives in California with his wife and children. He admires Dr. Steve Mann, who is considered the real father of wearable computers, and David Rolfe, a notable Assembler programmer who created classic arcade games in the 1980s.", Page xix

- Neurosky EEG brainwave chip and board

- Mindset Communications Protocol

- Lacy Jewellery Supply, 69 Queen Street, East, 2nd Floor, Toronto, Ontario M5C 1R8 CANADA 416-365-1375 1-800-387-4466

- Photocell experiment.

- Read and understand this Derivatives Of Displacement article, and if this article is temporarily unavailable, you can find a cache of it here.

- Read and understand these examples on absement.

- Differently exposed images of the same subject matter.

- sousveillance in the news...

- Here's what happens when you try and use a hand-held magnifier at the supermarket; better to put seeing aids in eyeglasses...

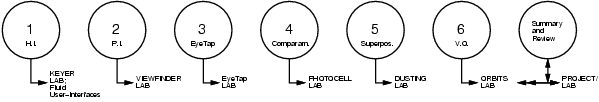
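As the readings on derivatives of displacement and absement above describe, absement is the time integral of displacement, measured in metre-seconds. It sits one step below displacement in the chain displacement, velocity, acceleration. A minimal numerical sketch follows, assuming sampled displacement data and using the trapezoidal rule (our choice of integration method). The function name and the example key press are hypothetical.

```python
"""Numerically integrate displacement to get absement (trapezoidal rule)."""


def absement(times, displacements):
    """Return running absement: integral of displacement over time.

    times: sample times in seconds (increasing)
    displacements: displacement at each time, in metres
    Result is in metre-seconds (m*s), one value per sample.
    """
    total = 0.0
    running = [0.0]
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        # Area of the trapezoid between consecutive samples.
        total += 0.5 * (displacements[i] + displacements[i - 1]) * dt
        running.append(total)
    return running


if __name__ == "__main__":
    # A key held at a constant 2 cm for 3 s accumulates absement linearly.
    print(absement([0, 1, 2, 3], [0.02, 0.02, 0.02, 0.02])[-1])  # ~0.06 m*s
    # Displacement ramping as x(t) = t gives absement t**2 / 2.
    print(absement([0, 1, 2], [0, 1, 2]))  # [0.0, 0.5, 2.0]
```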

Course "roadmap" by lab units and corresponding book chapters:

PDF; PostScript (idraw)

Labs were organized according to these six units (the first unit, on the KEYER, etc., includes more than one lab, because there is some intro material, background, getting started, etc.).

Organization of the course usually follows the six chapters in the course TEXTBOOK, but if you are interested in other material please bring this to the attention of the course instructor or TA and we'll try and incorporate your interests into the course design.

Location of the course textbook in the University of Toronto bookstore:

Kevin reported as follows: "I just stopped by the UofT Bookstore, and to help the rest of the students, I thought you could announce that the book is located in the engineering aisle, exactly to the left of the bookstore computer terminal, behind some Investment Science books."

Course summary:

The course provides the student with the fundamental knowledge needed in the rapidly growing field of Personal Cybernetics ("minds and machines", e.g. mind-machine interfaces, etc.) and Personal Intelligent Image Processing. These topics are often referred to colloquially as "Wearable Computing", "Personal Technologies", "Mobile Multimedia", etc. The course focuses on the future of computing and what will become the most important aspects of truly personal computation and communication. We are rapidly witnessing a merging of communications devices (such as portable telephones) with computational devices (personal organizers, personal computers, etc.).

The focus of this course is on the specific and fundamental aspects of visual interfaces that will have the greatest relevance and impact, namely the notion of a computationally mediated reality, as well as related topics such as Digital Eye Glass, brain-computer interfaces (BCI), etc., as explored in collaboration with some of our startups, such as Meta, and InteraXon, a spinoff company started by former students from this course.

A computationally mediated reality is a natural extension of next-generation computing. In particular, we have witnessed a pivotal shift from mainframe computers to the personal/personalizable computers owned and operated by individual end users. We have also witnessed a fundamental change in the nature of computing from large mathematical calculations, to the use of computers primarily as a communications medium. The explosive growth of the Internet, and more recently, the World Wide Web, is a harbinger of what will evolve into a completely computer-mediated world in which all aspects of life, not just cyberspace, will be online and connected by visually based content and visual reality user interfaces.

This transformation in the way we think and communicate will not be the result of so-called ubiquitous computing (microprocessors in everything around us). Instead of the current vision of "smart floors", "smart lightswitches", and "smart toilets" in "smart buildings" that watch us and respond to our actions, what we will witness is the emergence of "smart people": intelligence attached to people, not just to buildings.

This gives rise to what Ray Kurzweil (Director of Engineering at Google), Marvin Minsky (Father of Artificial Intelligence), and I refer to as the "Sensory Singularity".

And this will be done not by implanting devices into the brain, but rather simply by non-invasively "tapping" the highest-bandwidth "pipe" into the brain, namely the eye. This so-called "eye tap" forms the basis for devices that are currently built into eyeglasses (prototypes are also being built into contact lenses) to tap into the mind's eye.

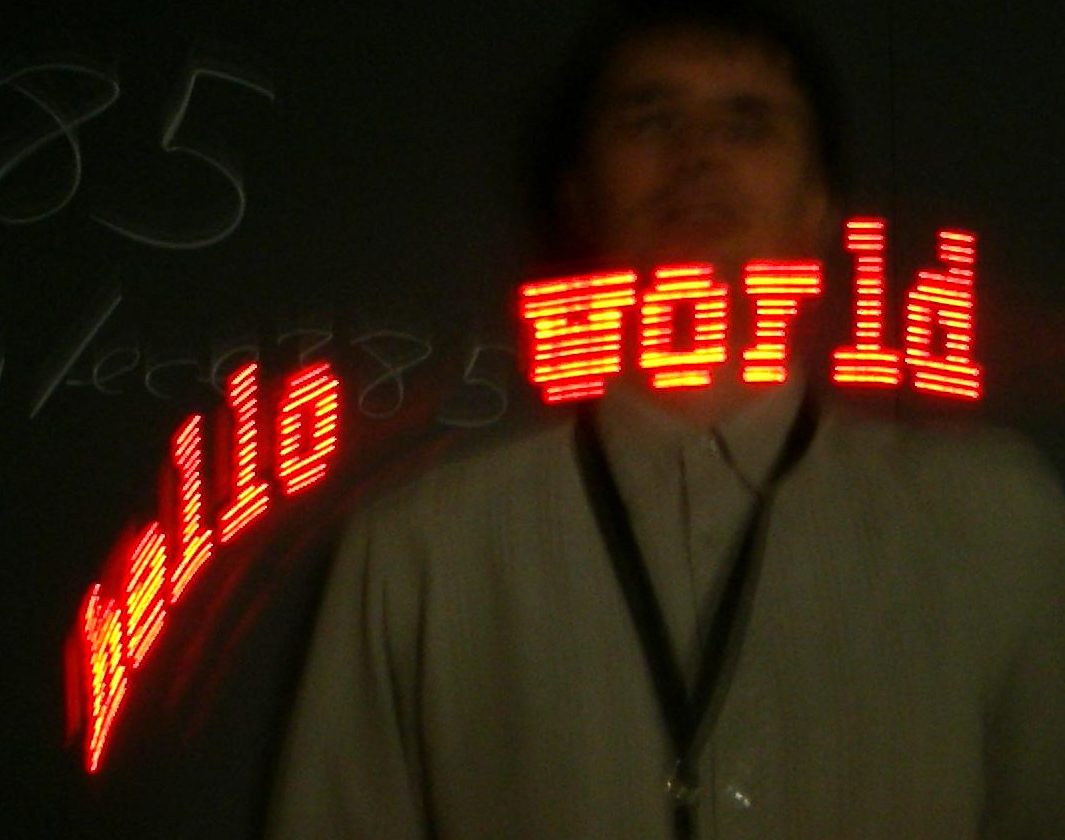

EyeTap technology causes inanimate objects to suddenly come to life as nodes on a virtual computer network. For example, while walking past an old building, the building may come to life with hyperlinks on its surface, even though the building is not wired for network connections in any way. These hyperlinks are merely a shared imagined reality that wearers of the EyeTap technology simultaneously experience. When entering a grocery store, a milk carton may come to life, with a unique message from a spouse, reminding the wearer of the EyeTap technology to pick up some milk on the way home from work.

EyeTap technology is not merely about a computer screen inside eyeglasses, but, rather, it's about enabling what is, in effect, a shared telepathic experience connecting multiple individuals together in a collective consciousness.

EyeTap technology will have many commercial applications, and will emerge as one of the most industrially relevant forms of communications technology. The WearTel (TM) phone, for example, uses EyeTap technology to allow individuals to see each other's point of view. Traditional videoconferencing merely provides a picture of the other person. But most of the time we call people we already know, so it is far more useful for us to exchange points of view. Therefore, the miniature laser light source inside the WearTel eyeglass-based phone scans across the retinas of both parties and swaps the image information, so that each person sees what the other person is looking at. The WearTel phone, in effect, lets someone "be you", rather than just "see you". By letting others put themselves in your shoes and see the world from your point of view, a very powerful communications medium results.

The course includes iPhone and Android phone technologies, and eyeglass-based "eyePhone" hybrids.

Text:

- Intelligent Image Processing (published by John Wiley and Sons, November 2, 2001)

- online materials and online examples, using the GNU Octave program, as well as other parts of the GNU Linux operating system (most of us use Ubuntu, Debian, or the like).

- additional optional references: publications, readings, etc., that might be of interest.

Organization of the textbook

The course will follow the textbook very closely; the textbook is organized into these six chapters:

- Personal Cybernetics: The first chapter introduces the general ideas of "Wearable Computing", personal technologies, etc. See http://wearcam.org/hi.htm.

- Personal Imaging: (cameras getting smaller and easier to carry), wearing the camera (the instructor's fully functioning XF86 GNUX wristwatch videoconferencing system, http://wearcam.org/wristcam/); wearing the camera in an "always ready" state

- Mediated Reality and the EyeTap Principle.

- Collinearity criterion:

- The laser EyeTap camera: Tapping the mind's eye: infinite depth of focus

- Contact lens displays, blurry information displays, and vitrionic displays

- Comparametric Equations, Photoquantigraphic Imaging, and comparagraphics (see http://wearcam.org/comparam.htm)

- Lightspace:

- lightvector spaces and anti-homomorphic imaging

- application of personal imaging to the visual arts

- VideoOrbits and algebraic projective geometry (see http://wearcam.org/orbits); Computer Mediated Reality in the real world; Reality Window Manager (RWM).
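VideoOrbits treats the coordinate transformations between frames of a camera viewing a planar scene (or rotating about its center of projection) as members of the projective group. The minimal sketch below shows that transformation and its group property: applying one transform after another equals a single transform whose 3x3 matrix is the product of the two. It assumes the common 8-parameter form x' = (A x + b) / (c^T x + 1), which may differ in notation from the textbook. Estimating the parameters from images is not shown, and all names are hypothetical.

```python
"""The 8-parameter planar projective transform and its group property.

Parameterization (assumed here, the common homography form):
    x' = (A [x, y]^T + b) / (c^T [x, y]^T + 1)
with params = (a11, a12, a21, a22, b1, b2, c1, c2).
"""


def project(params, x, y):
    """Map the point (x, y) through the projective transform."""
    a11, a12, a21, a22, b1, b2, c1, c2 = params
    denom = c1 * x + c2 * y + 1.0
    return ((a11 * x + a12 * y + b1) / denom,
            (a21 * x + a22 * y + b2) / denom)


def to_matrix(params):
    """3x3 homogeneous matrix [[A, b], [c^T, 1]]."""
    a11, a12, a21, a22, b1, b2, c1, c2 = params
    return [[a11, a12, b1], [a21, a22, b2], [c1, c2, 1.0]]


def from_matrix(m):
    """Normalize so the bottom-right entry is 1 (assumed nonzero)."""
    s = m[2][2]
    return (m[0][0] / s, m[0][1] / s, m[1][0] / s, m[1][1] / s,
            m[0][2] / s, m[1][2] / s, m[2][0] / s, m[2][1] / s)


def compose(second, first):
    """Params for applying `first`, then `second` (matrix product)."""
    a, b = to_matrix(second), to_matrix(first)
    prod = [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]
    return from_matrix(prod)


IDENTITY = (1.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0)
```

Because the transforms compose by matrix multiplication, a sequence of frame-to-frame estimates can be chained to relate every frame to a common reference frame.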

Other supplemental material

- Chording keyer (input device) for wearable/portable computing or personal multimedia environment

- fluid user interfaces

- previously published paper on fluid user interfaces

- University of Ottawa: Cyborg Law course. See also the University of Ottawa site, and an article on legal and philosophical aspects of Intelligent Image Processing.

- photocell experiment

- "Recording 'Lightspace' so shadows and highlights vary with varying viewing illumination", Optics Letters, Vol. 20, Iss. 4, 1995 ("margoloh")

- Example from previous year's work: data from final lab, year 2005: lightvectors and lightspace (See readme.txt file).

Lecture, lab, and tutorial schedule from previous years

Here is an example schedule from a previous year the course was taught.

Each year we modify the schedule to keep current with the latest research as well as with the interests of the participants in the course. If you have anything you find particularly interesting, let us know and we'll consider working it into the schedule...

- Week1 (Tue. Jan. 4 and Wed. Jan. 5): Humanistic Intelligence for Intelligent Image Processing; Humanistic User Interfaces, e.g. "LiqUIface" and other novel inputs that have the human being in the feedback loop of a computational process.

- Week2: Personal Imaging; concomitant cover activity and VideoClips; Wristwatch videophone; Telepointer, metaphor-free computing, and Direct User Interfaces.

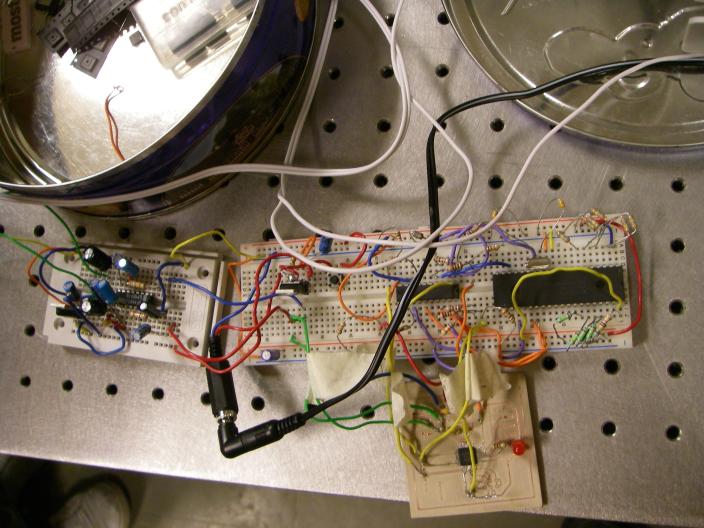

- Week3: Atmel AVR, handout of circuitboards for keyers, etc.; wiring instructions are now on this www site at http://wearcam.org/septambic/

- Week4: EyeTap part1; technology that causes the eye itself to function as if it were both a camera and display; collinearity criterion; Calibration of EyeTap systems; Human factors and user studies.

- Week5: EyeTap part2; Blurry information displays; Laser EyeTap; Vitrionics (electronics in glass); Vitrionic contact lenses.

- Week6: Comparametric Equations part1.

- Week7: READING WEEK: NO LECTURE THIS WEEK

- Week8: Comparametric Equations part2.

- Week9: Comparametric Equations part3. http://eyetap.org/ece1766/.

- Week10: Lightspace and anti-homomorphic vectorspaces.

- Week11: VideoOrbits, part1; background

- PDC intensive course may also be offered around this time;

- Week12: VideoOrbits, part2; Reality Window Manager (RWM); Mediated Reality; Augmented Reality in industrial applications; Visual Filters; topics for further research (graduate studies and industrial opportunities).

- Week13: review for final exam;

- Final Exam: in the standard exam period, usually sometime between mid-April and the end of April.

Course Evaluation (grading):

- Closely Supervised Work: 40% (in-lab reports, testing, participation, field trials, etc.).

- Not Closely Supervised Work: 25% (take-home assignments or the portion of lab reports done at home)

- Final exam: 35%. Exam is open book, open calculator, but closed network connection.

This course was originally offered as ECE1766; see the previous version (origins of the course) at http://wearcam.org/ece1766.htm for info from previous years.

Resources and info:

- AVR info

- avr_kit.tar (shell script that installs GCC AVR and SP12 for those of you using the parallel port approach...)

- Example from ECE385:

Supplemental material:

- the cyborGLOGGER community at http://glogger.mobi

- the EyeTap site, at www.eyetap.org

- related concepts like sousveillance, and collective intelligence...

- here's an interesting article about decentralization

- "the electronic device owned now by the most college students is the cell phone. Close behind is the laptop."

- A new name for cyborglogging and sousveillance: "lifecasting".

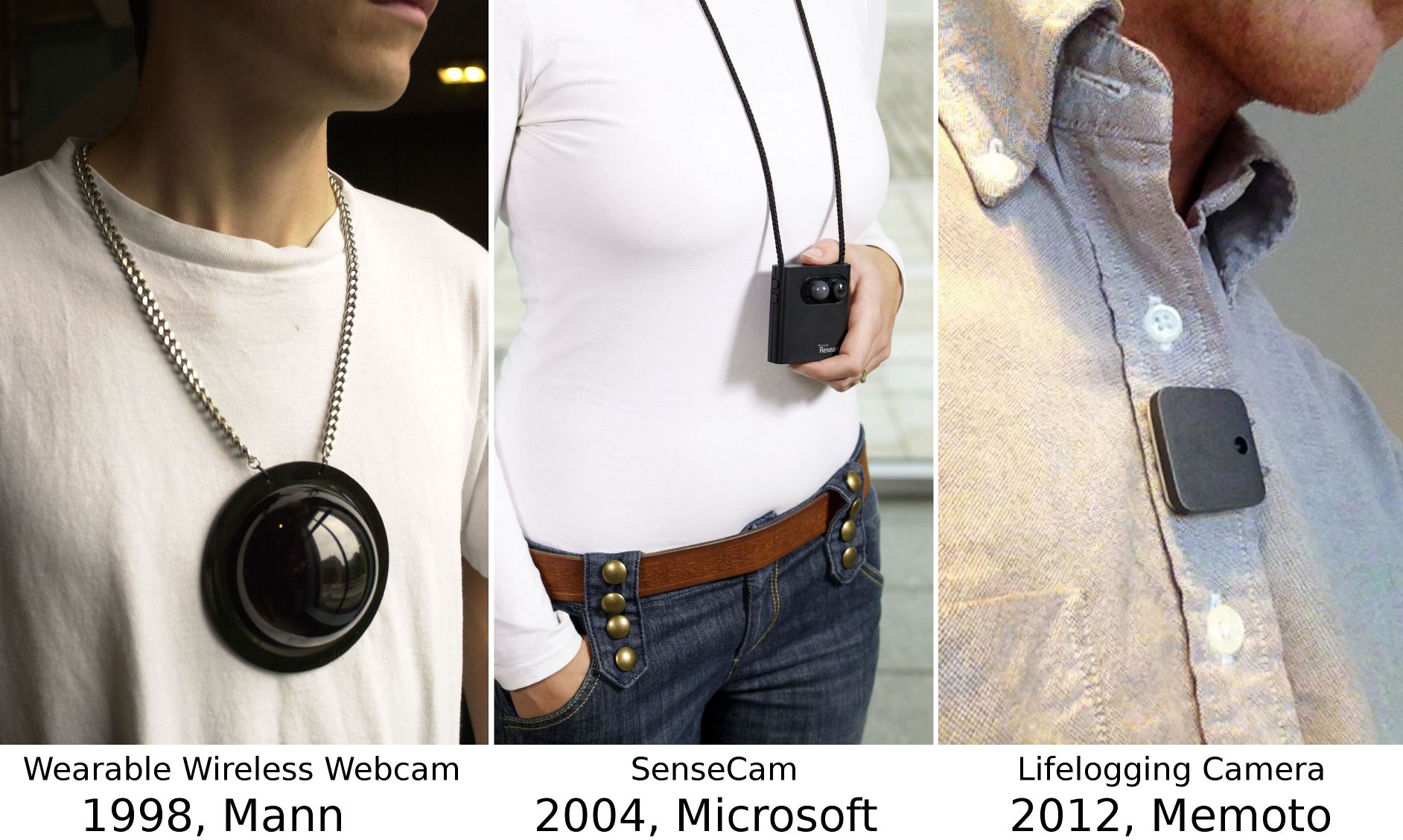

- Microsoft's interest in our work

Above: one of our neckworn sensory cameras, designed and built at University of Toronto, 1998, which later formed the basis for Microsoft's sensecam.

CyborGLOG of Lectures from previous year

(...on sabbatical in 2009, so last year the course was not offered. Therefore, the most up-to-date previous course CyborGLOG is from 2008.)

- Tue 2008 Jan 08:

My eyeglasses were recently broken (damaged) when I fell into a live three-phase power distribution station that was, for some strange reason, set up on a public sidewalk by a film production company. As a result, my eyeglasses are not working too well. Here is a poor-quality but still somewhat useful (understandable) capture of the lecture as transmitted live (archive) (please forgive the poor eyesight resulting from temporary replacement eyewear).

Another point of view is here.

- Thu 2008 Jan 10:

- Tu 2008 Jan 15:

- Th 2008 Jan 17:

- Tu 2008 Jan 22: lecture

- Th 2008 Jan 24: lecture

- Tu 2008 Jan 29: lecture

- Th 2008 Jan 31: walking to lecture with Ryan's presentation on AVR

- Tu 2008 Feb 05: lecture

- Th 2008 Feb 07: lecture

- Tu 2008 Feb 12: lecture

- Th 2008 Feb 14: lecture

- 2008 Feb 18-22: reading week, no lectures or labs

- Tu 2008 Feb 26: lecture

- Th 2008 Feb 28: lecture

- Tu 2008 Mar 04: lecture

- Th 2008 Mar 06: lecture

- Tu 2008 Mar 11: lecture

- Th 2008 Mar 13: lecture

- Tu 2008 Mar 18: lecture

- Th 2008 Mar 20: lecture

- Tu 2008 Mar 25: lecture

- Th 2008 Mar 27: lecture

- Tu 2008 Apr 01: lecture

- Th 2008 Apr 03: lecture

CyborGLOG of Labs

- Fr 2008 January 18th:

- Fr 2008 January 25:

- Fr 2008 February 01: Snow storm; university officially closed, but I still made myself available to people who came and wanted some help:

- Fr 2008 February 08:

- Fr 2008 February 29:

- Fr 2008 March 07:

- Fr 2008 March 14: Individual group evaluations (not in the public 'glog)

- Fr 2008 March 21: Good Friday (no lab)

- Fr 2008 March 28: lab

Other background readings:

- photocell experiment

- Computational photography

- Candocia's research

- Candocia's paper in

- some of these papers also show up in scientific commons, e.g. take a look at the second paper listed in scientific commons, or any of the other papers on comparametrics, or range-range estimates.

- background estimation under rapid gain change

Christina Mann's fun guide: How to fix things, drill holes, install binding posts, and solder wires to terminals

Material from year 2007:

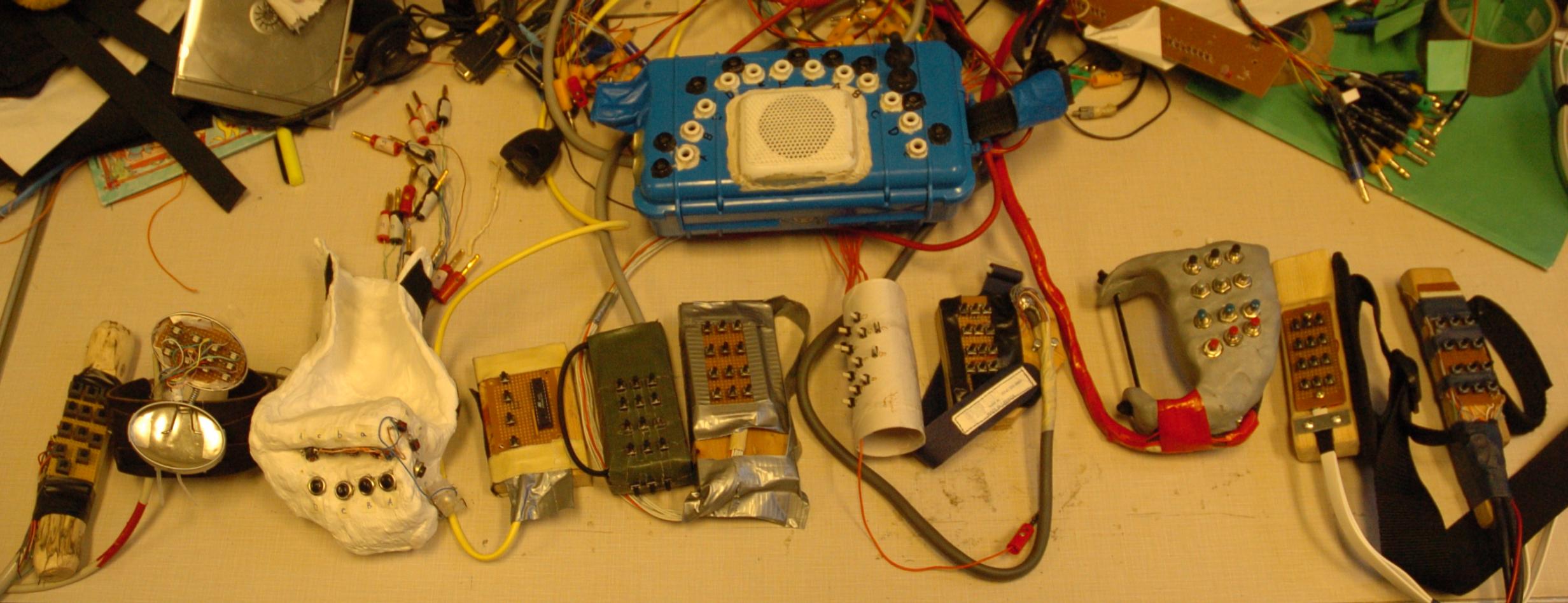

Lab 2007-0: Demonstration of an analog keyboard

Example of analog keyboard; continuous fluidly varying input space:

Lab 2007-1, Chapter 1 of textbook: Humanistic Intelligence

In lab 1 you will demonstrate your understanding of Humanistic Intelligence, either by making a keyer or by programming an existing keyer, so that you can learn the overall concept.

Choose one of:

- Build a keyer that can be used to control a computer, camera, music player, or other multimedia device. Your keyer can be modeled after the standard Twiddler layout. You can take a look at http://wearcam.org/ece516/musikeyer.htm to get a rough sense of what our typical keyers look like. See also pictures from last year's class, of various keyers that were made, along with the critiques given of each one. Your keyer should have 13 banana plugs on it: one common and 12 others, one of these 12 for each key. If you choose this option, your keyer will be graded for overall engineering (as a crude and simple prototype), ergonomics, functionality, and design.

- Modify one or more of the programs on an existing keyer to demonstrate your understanding of these keyers. Many of our existing keyers use the standard Twiddler layout, and are programmed using the C programming language to program one or more Atmel ATMEGA 48 or ATMEGA 88 microcontrollers. If you choose this project, please contact Prof. Mann or T.A. Ryan Janzen, to get a copy of the C program, and to discuss a suitable modification that demonstrates your understanding of keyers.

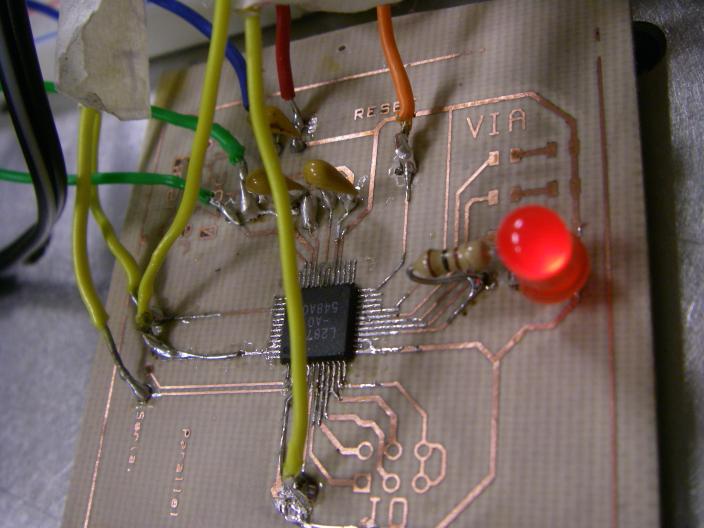

- Assemble a "blueboard" (we supply the printed circuit board and pre-programmed chips). You should have experience soldering DIPs, etc. The blueboard is the standard interface for most wearable computers and personal cybernetics equipment, and features 15 analog inputs for 12 finger keys and 3 thumb keys. If you choose this option, please contact Prof. Mann or T.A. Ryan Janzen to determine the materials needed.

- Implement a bandpass filter. You can do this using a suitable personal computer that has a sound card, that can record and play sound (at the same time). You can write a computer program yourself, or find an existing program written by someone else that you can use. Your filter should monitor a microphone input, filter it, and send the output to a speaker. The filter should be good enough that it can be set to a particular frequency, for example, 440Hz, and block sounds at all but that frequency. Any sound going into the microphone should be audible at that specific frequency only, i.e. you should notice a musical or tonal characteristic in the output when the input is driven with arbitrary or random sound.
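For the bandpass-filter option, here is a minimal offline sketch of one possible design: the band-pass biquad from Robert Bristow-Johnson's "Audio EQ Cookbook" (constant 0 dB peak gain), centred at 440 Hz. This is our choice of filter, not one prescribed by the lab. Live microphone-to-speaker I/O is left out because it depends on your sound card and audio library. The sample rate, Q value, and function names are assumptions.

```python
"""Offline sketch of a narrow band-pass filter (e.g. centred at 440 Hz).

Uses the band-pass biquad from Robert Bristow-Johnson's "Audio EQ Cookbook"
(constant 0 dB peak gain). This is one possible design choice, not the one
prescribed by the lab; live microphone/speaker I/O is omitted.
"""
import math


def bandpass_coeffs(f0, fs, q):
    """Return normalized (b0, b1, b2, a1, a2) for centre f0 Hz, quality q."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    return (alpha / a0, 0.0, -alpha / a0,
            -2 * math.cos(w0) / a0, (1 - alpha) / a0)


def biquad(samples, coeffs):
    """Direct-form-I difference equation applied sample by sample."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b0 * x0 + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


def rms(values):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values))


if __name__ == "__main__":
    fs = 8000
    coeffs = bandpass_coeffs(440.0, fs, q=30.0)
    tone = [math.sin(2 * math.pi * 440 * n / fs) for n in range(fs)]
    off = [math.sin(2 * math.pi * 2000 * n / fs) for n in range(fs)]
    # Compare steady-state levels (second half, after the transient).
    print(rms(biquad(tone, coeffs)[fs // 2:]), rms(biquad(off, coeffs)[fs // 2:]))
```

The 440 Hz tone passes at close to full level while the 2000 Hz tone is strongly attenuated. Feeding white noise (for example `random.uniform(-1, 1)` samples) through `biquad` yields output dominated by frequencies near 440 Hz, which is the tonal character the lab asks you to hear. A higher Q narrows the band but lengthens the ringing.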

Lab 1 results:

- Greg's bandpass filter was based on CLAM

- JAMES: perfboard-based keyer

- MOHAMAD's bandpass filter based on FPGA

- Jono (Jonathan, Iceman)'s keyer

- David's blueboard interface/adapter

- PENG's keyer

- BRYAN's keyer, considering foam pipe

- Anthony's keyer on perfboard, with ribbon cable

- Rachenne made keyer on perfboard, with the 13 plugs.

- Philip's pipe-based keyer

OKI Melody 2870A spec sheet

The OKI Melody 2870A spec sheet is here.

Lab 2007-2, Chapter 2 of textbook: Eyeglass-based display device

In this lab we will build a simple eyeglass-based display device, having a limited number of pixels, in order to understand the concept of eyeglass-based displays and viewfinders.

This display device could function with a wide range of different kinds of wearable computing devices, such as your portable music player.

Lab 2007-3, Chapter 3 of textbook: EyeTap (will also give intro to photocell)

Presentation by James Fung:

Lab 2007-4, Chapter 4 of textbook: Photocell experiment

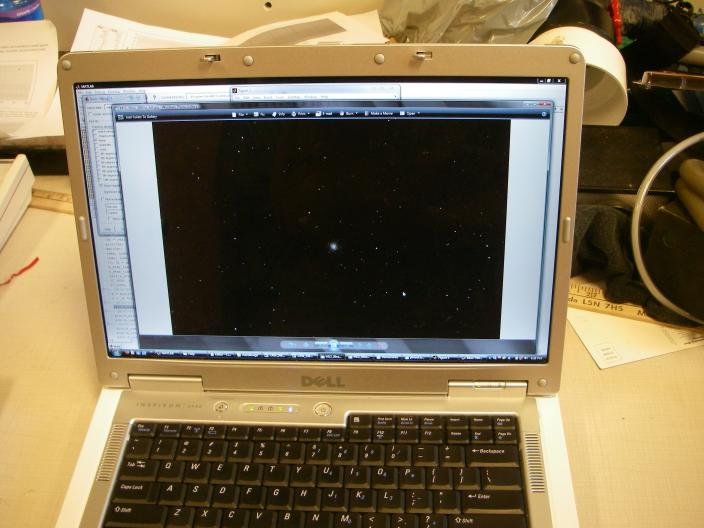

Photocell experiment and a recent publication describing it.

Today there were two really cool projects that deserve mention in the ECE516 Hall of Fame:

David's comparametric analysis and CEMENTing of telescope images:

Lab 2007-5, Chapter 5 of textbook: Lightvectors

Lab 2007-6 and 7

Final projects: something of your choosing, to show what you've learned so far.

No written or otherwise recorded report is required.

However, if you choose to write or record some form of report or other support material, it need not be of a formal nature, but you must, of course, abide by good standards of academic conduct, e.g. any published or submitted material must:

- properly cite reference source material (e.g. where any ideas that you decide to use came from);

- properly cite collaborations with others. You are free to do individual projects or group projects, and free to discuss and collaborate even if doing individual projects, but must cite other partners, collaborators, etc..

It is expected that all students will have read and agreed to the terms of proper academic conduct. This is usually introduced in first year, but for anyone who happens to have missed it in earlier years, it's here: How Not to Plagiarize. It's written mainly to apply to writing, but the ethical concept is equally applicable to presentations, ideas, and any other representation of work, research, or the like.

Year 2006 info:

Keyer evaluation is posted:

Lab 2

EyeTap lab: Explanation of how eyetap works; demonstration of eyetap; demonstration of OPENvidia.

C.E.M.E.N.T. lab

Comparametrics lab: Recover the damage done by the Elipses of Evil, on the Axes of Good:

IEEE ISTAS.