Please read Chapter 1 before Lab 1, and come prepared with questions about anything you don't understand.

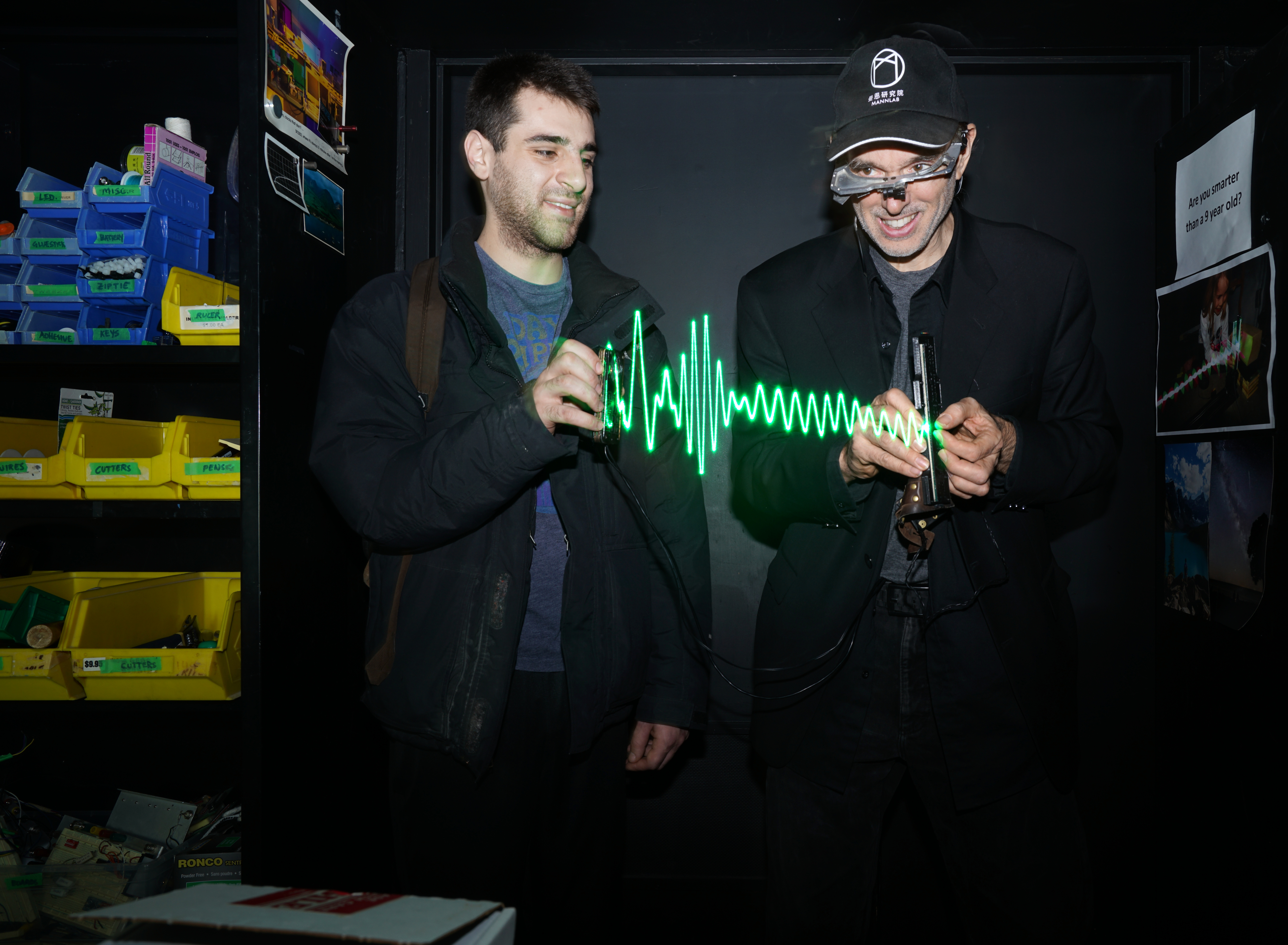

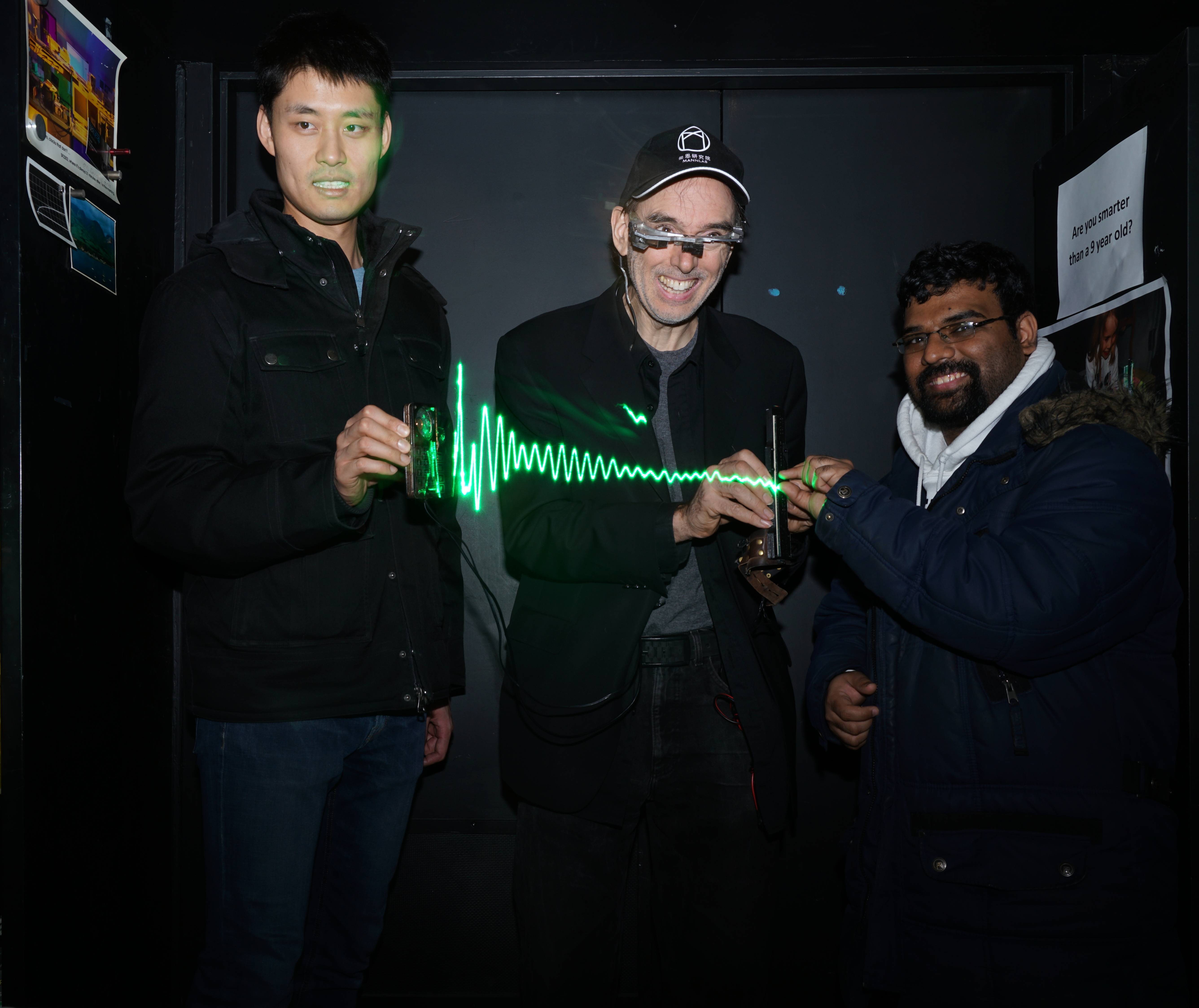

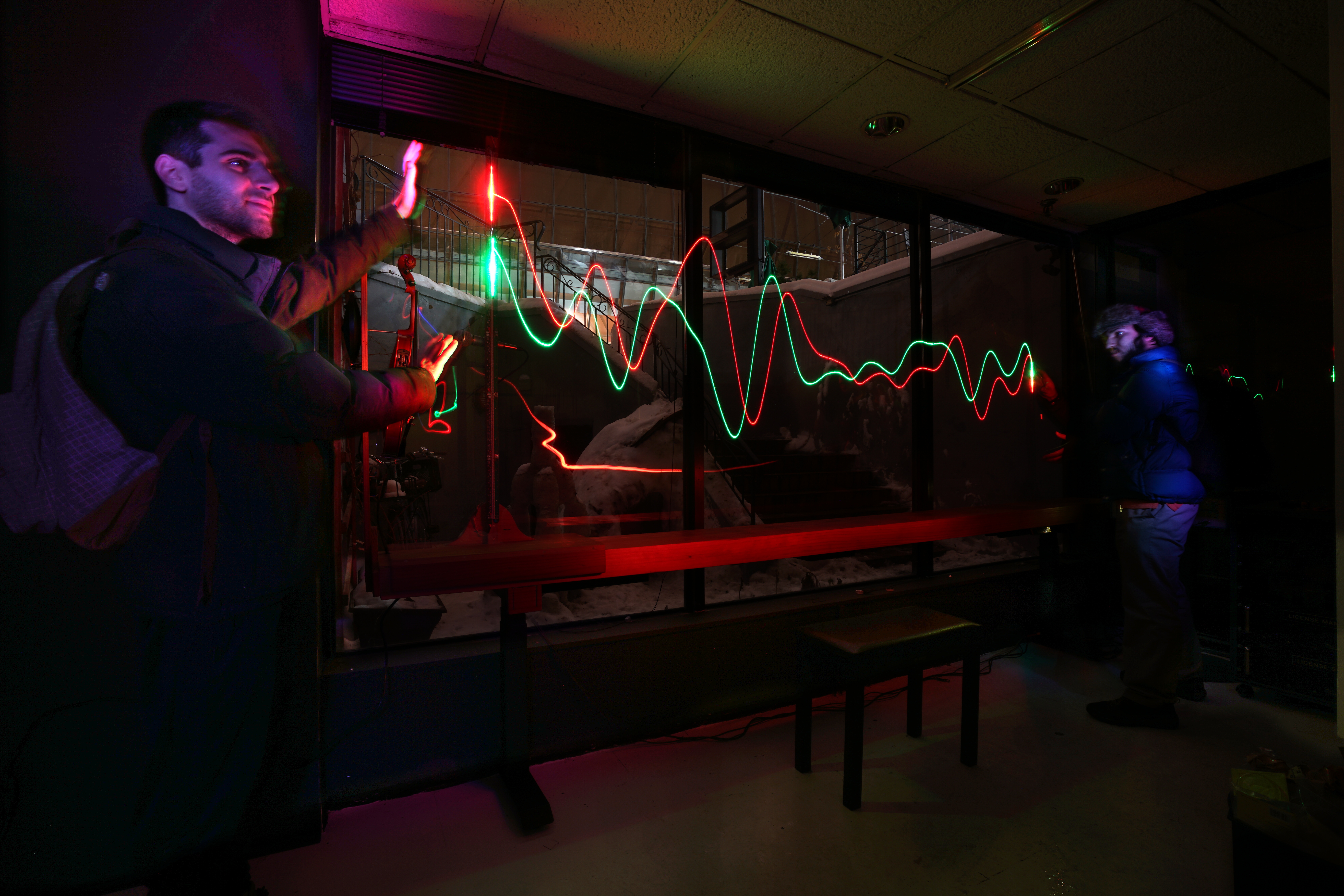

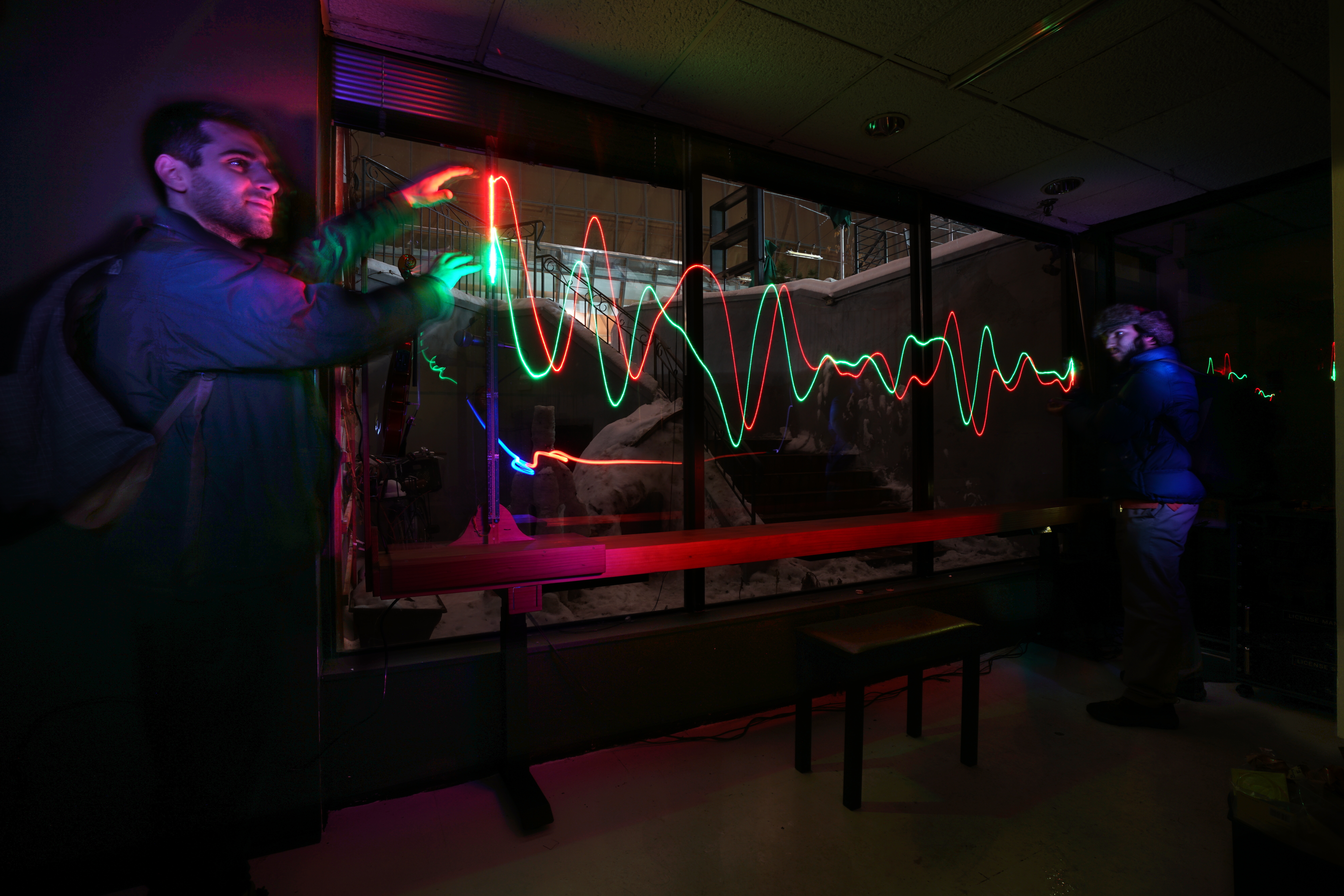

Augmented Reality robotics (ARbotics) lets us see how hand movement affects the sound waves from a robotically actuated violin. Notice that moving the hands near the sound waves has a slight but noticeable effect on them. Sound waves reflect off nearby objects, and we can see and understand the patterns they form when they reflect off various objects.
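To make the reflection effect concrete, here is a minimal one-dimensional sketch of how a reflected wave combines with the incoming wave to form a stationary pattern. It is only an illustration, not the method used to produce the images above. The function name `standing_wave_amplitude`, the 440 Hz tone, the flat rigid reflector, and the speed of sound of 343 m/s are all assumptions chosen for the example.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 degrees C


def standing_wave_amplitude(x, frequency, reflector_pos=0.0, reflection=1.0):
    """Amplitude envelope at position x (m) in front of a flat reflector.

    Hypothetical helper: sums the phasor of a wave travelling toward the
    reflector and the phasor of the wave it reflects back, then returns the
    magnitude of the sum. reflection=1.0 models a perfectly rigid surface,
    reflection=0.0 models no reflector at all.
    """
    k = 2 * math.pi * frequency / SPEED_OF_SOUND  # wavenumber in rad/m
    d = x - reflector_pos                         # distance from the reflector
    incident = cmath.exp(1j * k * d)              # wave heading toward the reflector
    reflected = reflection * cmath.exp(-1j * k * d)  # wave heading away from it
    return abs(incident + reflected)


if __name__ == "__main__":
    frequency = 440.0  # Hz, assumed test tone
    wavelength = SPEED_OF_SOUND / frequency
    for fraction in (0.0, 0.125, 0.25, 0.375, 0.5):
        x = fraction * wavelength
        amp = standing_wave_amplitude(x, frequency)
        print(f"x = {x:.3f} m  amplitude = {amp:.3f}")
```

With a perfect reflector the envelope is `2 * |cos(k * d)|`, so the sound is loudest at the surface and cancels a quarter wavelength away (roughly 0.19 m for the assumed 440 Hz tone). Changing `reflector_pos` shifts the whole pattern, which is a simplified picture of why a moving hand disturbs the waves.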

Interactive Augmented and Virtual Reality robotics for AI, and HI = HuMachine Learning.

Reading assignment: start with equation 1 on page 1411 of this paper.