As machines are more intricately woven into the fabric of our everyday life, machine intelligence presents existential risks to humankind [Markoff 2015, Bostrom 2014], and we must guard against these risks. But survival of the human race is not enough. We need to ensure we're not living a degraded and debased life under the surveillance of intelligent machines that want to know everything about us and yet reveal nothing about themselves. Take for example television. Early television sets tried to display a picture no matter how bad the signal: they simply did their best to show us a picture at all times, even if that picture was flawed or contained some random noise or optical "snow". A modern television receiver only allows its owner to see a picture if the television "decides" it is crisp enough to be approved for viewing. Otherwise, the TV set just displays a blue screen with the message "No Signal". Without continuous feedback, we can't move the antenna a little bit, or wiggle the wires, or quickly try different settings and inputs, to see what improves the picture. Machines have become like great gods, made perfect for worship, and are no longer showing us the flaws that used to allow us to understand them. In a sense the human has been taken out of the feedback loop, and we no longer get to see (and learn from) the relationship between cause (e.g. the position of a TV antenna or wiring) and effect (e.g. the subtle variation in picture quality that used to vary continuously with varying degree of connectivity).

Footnote: An old television responded within about a thousandth of a second to an input signal, whereas modern televisions wait a few seconds before displaying the signal [Sledgehammer Awards, IEEE GEM2015] -- thousands of times less feedback bandwidth. The issue here is not whether it is digital or analog -- either standard can support graceful degradation and quick responsive feedback. The issue here is the decisions that were made by those who designed these systems. Television is just one of many examples of this problem. Consider also the Apple MacBook power supply transformer, which has a yellow or green light to indicate power. When you plug it in, you have to wait a few seconds before you see whether or not it is connected. As a result, you can't wiggle a loose cable to rapidly locate poor connections.

More seriously, as our computers, web browsers, and other "things" become filled with opaque and mysterious operating systems that claim to be helpful, they actually reveal less about how they work. Like the television with "No Signal", when our web browser says "Page not found", in a world of secret technologies, we know less and less about how our sources of information might be corrupted. Increasingly, the human element in our democracy -- public opinion -- can be manipulated, without the public knowing why. It all comes back to a simple question: whether we allow technology to be opaque and closed-source, or whether we force it to have the openness of integrity.

The same opacity and lack of integrity affect electronic voting and end-of-life sensors (How do we know if the end-of-life sensor is working accurately, or if its AI engine is governed by some other unknown criteria?), as well as power grid management, stock-trading AI, warfare situational awareness, LIBOR, and other large-scale complex systems that, while beyond the comprehension of any single person, have an immediate impact on our lives. Big AI could make us feel like an ant being burned by a 5-year-old with a magnifying glass.

We are at a precipice where NO human or group of humans can understand the underlying systems that run our world. This lack of comprehensibility (observability) becomes greatly compounded with neural nets, self-modifying code, quantum computing, etc. The issues we face today are not likely to lead to a Chernobyl-like event, but next-generation code and systems have that potential, because of their black-hole-like nature. Once a few billion sensory IoT observations drop into a multi-layer neural net or quantum calculation, we run the risk of having no method for transparency (observability) of any kind.

In all of these, what we've lost is something called "observability", which is a measure of how well the internal states of a machine can be inferred from its external outputs [Kalman 1960].
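For linear systems this notion can be made concrete. The following is a minimal sketch, assuming a discrete-time linear model x[k+1] = A x[k] with output y[k] = C x[k]; the matrices and function names are hypothetical illustrations, not taken from any system discussed here. The standard rank test says the internal state can be reconstructed from the outputs exactly when the stacked matrix [C; CA; ...; CA^(n-1)] has rank n.

```python
from fractions import Fraction


def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def rank(m):
    """Rank by Gaussian elimination, using exact rational arithmetic."""
    rows = [[Fraction(x) for x in r] for r in m]
    found = 0  # number of pivots found so far
    n_cols = len(rows[0]) if rows else 0
    for col in range(n_cols):
        pivot = next((r for r in range(found, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[found], rows[pivot] = rows[pivot], rows[found]
        for r in range(found + 1, len(rows)):
            factor = rows[r][col] / rows[found][col]
            rows[r] = [x - factor * y for x, y in zip(rows[r], rows[found])]
        found += 1
    return found


def observability_matrix(A, C):
    """Stack C, CA, CA^2, ..., CA^(n-1) row by row."""
    stacked, block = [], C
    for _ in range(len(A)):
        stacked.extend(block)
        block = mat_mul(block, A)
    return stacked


def is_observable(A, C):
    """True when every internal state can be inferred from the outputs."""
    return rank(observability_matrix(A, C)) == len(A)


# Hypothetical two-state machine: state = (position, velocity).
A = [[1, 1],
     [0, 1]]
shows_position = [[1, 0]]  # the output reveals position
shows_velocity = [[0, 1]]  # the output reveals velocity only

print(is_observable(A, shows_position))
print(is_observable(A, shows_velocity))
```

In this toy model, watching position over time lets us work out velocity as well, so the machine is observable. Watching only velocity never reveals position, so part of the machine's state stays hidden no matter how long we look.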

Machines have inputs and outputs, as do humans. Together that's four in/out paths: Machine in; Machine out; Human in; Human out. But when the human and machine operate together with feedback, there is also Observability and Controllability, which add two more, for a total of six paths, giving rise to a concept called Humanistic Intelligence:

“Humanistic Intelligence [HI] is intelligence that arises because of a human being in the feedback loop of a computational process, where the human and computer are inextricably intertwined. When a wearable computer embodies HI and becomes so technologically advanced that its intelligence matches our own biological brain, something much more powerful emerges from this synergy that gives rise to superhuman intelligence within the single "cyborg" being.”

--Kurzweil, Minsky, and Mann, "The Society of Intelligent Veillance" IEEE ISTAS 2013.

Let's assume for the moment that the machines are (or soon will be) more powerful than we are. Such a scenario makes controllability a form of surveillance (oversight, i.e. the machine sensing and knowing us) and observability a form of sousveillance (undersight, i.e. us sensing and knowing the machine).

HI requires all six paths, but most of today's machines, whether they support AI (Artificial Intelligence) or IA (Intelligence Augmentation), properly support only five of the six. Most machines, like the modern television or the modern computer, are pretty good at implementing controllability, but quite poor at implementing observability. They can watch us quite well. Modern televisions and computers have cameras in them that can see us and recognize our facial expressions and hand gestures. And they know everything about us. But we can't see them or know very much about them.

As we build machines that take on human qualities, will they become machines of integrity and loving grace -- machines that have the capacity to love and be loved -- or will they become machines of hypocrisy -- one-sided machines that can't return the love and trust we give to them? If machines are going to be our friends, and if the machines are going to win our trust, then they also have to trust us. Trust is a 2-way street. So if the machines don't trust us and if the machines refuse to show their flaws to us, then we can't trust them. Machines need to show us their imperfections and their raw state, like a good friend or spouse where you trust each other and show yourselves, e.g. naked or unfinished. If machines are afraid to be seen naked or unfinished, then we should not show ourselves in that state to them. Technology that is not transparent cannot be trusted.

Indeed, when machines want to know everything about us, yet reveal nothing about themselves, that's a form of hypocrisy. You don't have to look too far to see this kind of hypocrisy with regard to sur/sousveillance; here's a picture from our local supermarket:

The opposite of hypocrisy is integrity. Thus machines observing us, and not themselves observable, are machines that lack integrity.

Observability and integrity require responsiveness (quick immediate feedback) and comprehensibility (making machines that are easy to understand). Systems implementing HI help you understand and act in the world, rather than masking out failure modes as do TV standards like HDMI. Indeed we're seeing a backlash against the "machines as gods" dogma, and a resurgence of the "glitchy" raw aspects of quick responsive comprehensible technologies like vacuum tubes, photographic film, and a return to the "steampunk" aesthetic and incandescent light bulbs (transparent technology).

One thought I had too: as the machines get smarter, we seem to be getting stupider. For instance, the average person today vs. say, 100 years ago, is pretty incapable of fixing things in everyday life. Like a car: way back when, the average person could do repairs on the car, but now the car is less mechanical, and more like a computer. You don't fix the broken thing, you just throw it away and order a new part. This might facilitate people becoming more like infants in their day to day life. They don't see themselves as competent to fix or repair their items -- they are more dependent and trusting. Whereas their grandparents or great-grandparents would "lift the hood" and often they could see what might be wrong. They were more willing to rely on their own intelligence or instincts to solve a problem, whereas now we just trust the machine or by extension the corporation, or the government to "fix" our problems for us. I'm using the car as an analogy, but in a sense modern life is "helping" people to be more helpless. Which sets the stage for machines to be our "parents" and us to be the infants -- suckling at the electronic teat for everything we need. LOL. That to me is the real threat. A population of helpless people, trusting that the "machine" will fix everything, and will have their best interests at heart.

Beth Mann, personal correspondence, 2015 December 9th

So instead of ML (Machine Learning), let's go for MLG (Machines Learning/Loving Grace) through HI (Humanistic Intelligence), and in particular, machines that are observable, and thus have integrity.

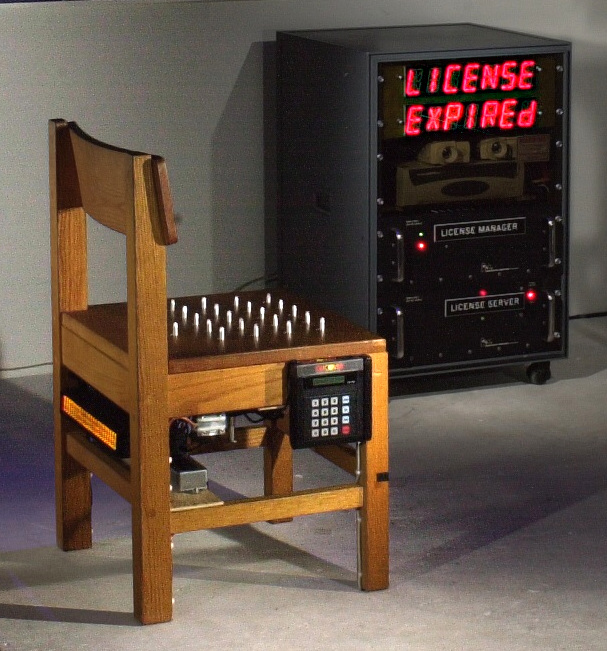

Imagine a machine you rely on every day, like a hearing aid, seeing aid (eyeglasses), bicycle, automobile, or light switch. Now imagine giving it TV-like qualities such as region codes and license servers. You drive across the border and suddenly your car stops working because of a software region code error. Imagine a chair that's software-based. Spikes retract when you insert a credit card into the card reader on the side of the chair, to "download a 1-seat floating license".


Or imagine the digital lightswitch of the future. Instead of two distinct quickly responsive states, "up is on and down is off", we have a single button: push-on, push-off, with a random three to five second delay, just like HDMI television. So the machine immediately reads the pushbutton switch but then waits before it gives feedback on your actions. Now throw in a 99-percent responsiveness (a mere 1 percent packet loss), and you can drive those humans insane!
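To see why this combination is so maddening, here is a minimal simulation sketch of such a switch. The class name, parameter names, and the model of the user (who waits out the longest possible delay before pressing again) are all hypothetical assumptions made for illustration; only the three to five second delay and the 1 percent loss come from the description above.

```python
import random


class LaggySwitch:
    """Push-on/push-off button whose light changes only after a random delay."""

    def __init__(self, min_delay=3.0, max_delay=5.0, loss_rate=0.01, rng=None):
        self.min_delay = min_delay  # shortest feedback delay, in seconds
        self.max_delay = max_delay  # longest feedback delay, in seconds
        self.loss_rate = loss_rate  # fraction of presses silently dropped
        self.rng = rng or random.Random()
        self.light_on = False

    def press(self):
        """Return (registered, delay_seconds) for a single button press."""
        if self.rng.random() < self.loss_rate:
            return False, None  # press lost: the light never changes
        self.light_on = not self.light_on
        return True, self.rng.uniform(self.min_delay, self.max_delay)


def seconds_until_light_changes(switch):
    """Time a patient user waits before seeing any effect of pressing.

    Assumption: the user cannot tell a lost press from a slow one, so they
    wait out the longest possible delay before daring to press again.
    """
    waited = 0.0
    while True:
        registered, delay = switch.press()
        if registered:
            return waited + delay
        waited += switch.max_delay


switch = LaggySwitch(rng=random.Random(2015))
waits = [seconds_until_light_changes(switch) for _ in range(1000)]
print(f"shortest wait: {min(waits):.1f} s, longest wait: {max(waits):.1f} s")
```

An impatient user fares even worse: because the button is push-on, push-off, pressing again before the delay has elapsed may undo a press that was in fact registered. The old two-position switch never posed that dilemma.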